Paul Boddie's Free Software-related blog

Paul's activities and perspectives around Free Software

Porting L4Re and Fiasco.OC to the Ben NanoNote (Part 6)

March 26th, 2018

With all the previous effort adjusting Fiasco.OC and the L4Re bootstrap code, I had managed to get the Ben NanoNote to run some code, but I had encountered a problem entering the kernel. When an instruction was encountered that attempted to clear the status register, the boot process did not seem to get any further. The status register controls things like which mode the processor is operating in, and certain modes offer safety guarantees about how much else can go wrong, which is useful given that they are typically entered when something has already gone wrong.

When starting up, a MIPS-based processor typically has the “error level” (ERL) flag set in the status register, meaning that normal operations are suspended until the processor can be configured. The processor will be in kernel mode and it will bypass the memory mapping mechanisms when reading from and writing to memory addresses. As I found out in my earlier experiments with the Ben, running in kernel mode with ERL set can mask problems that may then emerge when unsetting it, and clearing the status register does precisely that.

With the kernel’s _start routine having unset ERL, accesses to lower memory addresses will now need to go through a memory mapping even though kernel mode still applies, and if a memory mapping hasn’t been set up then exceptions will start to occur. This tripped me up for quite some time in my previous experiments until I figured out that a routine accessing some memory in an apparently safe way was in fact using lower memory addresses. This wasn’t a problem with ERL set – the processor wouldn’t care and just translate them directly to physical memory – but with ERL unset, the processor now wanted to know how those addresses should really be translated. And since I wasn’t handling the resulting exception, the Ben would just hang.
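The effect of ERL on address translation can be summarised in a small model. This is only a sketch of the standard MIPS32 kernel-mode segments, not code from Fiasco.OC; the names `Route`, `Translation` and `translate` are made up for illustration, and cache attributes are ignored entirely.

```cpp
#include <cstdint>

// Outcome of presenting a virtual address to a MIPS32 core in kernel mode.
enum class Route { Direct, NeedsMapping };

struct Translation {
  Route route;
  std::uint32_t physical;  // only meaningful when route == Route::Direct
};

// Simplified model: kseg0 and kseg1 (0x80000000 to 0xbfffffff) are always
// translated by dropping the top three address bits. Lower addresses are
// passed through untouched while ERL is set, but otherwise need a mapping,
// as do the addresses above kseg1.
constexpr Translation translate(std::uint32_t vaddr, bool erl) {
  return (vaddr >= 0x80000000u && vaddr < 0xc0000000u)
             ? Translation{Route::Direct, vaddr & 0x1fffffffu}
         : (vaddr < 0x80000000u && erl)
             ? Translation{Route::Direct, vaddr}
             : Translation{Route::NeedsMapping, 0};
}
```

With this model, a lower address such as 0x01fb5000 is usable directly while ERL is set, but the same access needs a mapping (and raises an exception without one) once ERL has been cleared, whereas a kseg0 address like 0x81400000 behaves the same in both cases.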

Debugging the Debugging

I had a long list of possible causes, some more exotic than others: improperly flushed caches, exception handlers in the wrong places, a bad memory mapping configuration. I must admit that it is difficult now, even looking at my notes, to fully review my decision-making when confronting this problem. I can apply my patches from this time and reproduce a situation where the Ben doesn’t seem to report any progress from within the kernel, but with hindsight and all the progress I have made since, it hardly seems like much of an obstacle at all.

Indeed, I have already given the game away in what I have written above. Taking over the framebuffer involves accessing some memory set up by the bootloader. In the bootstrap code, all of this memory should actually be mapped, but since ERL is set I now doubt that this mapping was even necessary for the duration of the bootstrap code’s execution. And I do wonder whether this mapping was preserved once the kernel was started. But what appeared to happen was that when my debugging code tried to load data from the framebuffer descriptor, it would cause an exception that would appear to cause a hang.

Since I wanted to make progress, I took the easy way out. Rather than try and figure out what kind of memory mapping would be needed to support my debugging activities, I simply wrapped the code accessing the framebuffer descriptor and the framebuffer itself with instructions that would set ERL and then unset it afterwards. This would hopefully ensure that even if things weren’t working, then at least my things weren’t making it all worse.
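The wrapping described here was done with a few assembly language instructions, but the shape of the idea can be sketched in C++ as a scoped guard. Everything in this sketch is an assumption made for illustration: `FakeStatus` stands in for the CP0 Status register (which real code would access with mfc0 and mtc0), and `ErlGuard` is a hypothetical name. The only hardware fact relied upon is that ERL is bit 2 of the Status register.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint32_t STATUS_ERL = 1u << 2;  // "error level" flag in Status

// Stand-in for the CP0 Status register; on the Ben this would be read and
// written with mfc0/mtc0 rather than through an ordinary variable.
struct FakeStatus {
  std::uint32_t value;
};

// Sets ERL on construction and restores the earlier ERL state on
// destruction, so that the code in between runs with ERL set.
class ErlGuard {
public:
  explicit ErlGuard(FakeStatus &status)
      : status_(status), had_erl_((status.value & STATUS_ERL) != 0) {
    status_.value |= STATUS_ERL;
  }
  ~ErlGuard() {
    if (!had_erl_)
      status_.value &= ~STATUS_ERL;
  }
  ErlGuard(const ErlGuard &) = delete;
  ErlGuard &operator=(const ErlGuard &) = delete;

private:
  FakeStatus &status_;
  bool had_erl_;
};
```

Debugging code placed in a block after constructing such a guard would run with ERL set, and the flag would be cleared again on leaving the block only if it had been clear beforehand.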

Lost in Translation

It now started to become possible to track the progress of the processor through the kernel. From the _start routine, the processor jumps to the kernel_main function (in kernel/fiasco/src/kern/mips/main.cpp) and starts setting things up. As I was quite sure that the kernel wasn’t functioning correctly, it made sense to drop my debugging code into various places and see if execution got that far. This is tedious work – almost a form of inefficient “single-stepping” – but it provides similar feedback about how the code is behaving.

Although I had tried to do a reasonable job translating certain operations to work on the JZ4720 used by the Ben, I was aware that I might have made one or two mistakes, also missing areas where work needed doing. One such area appeared in the Cpu class implementation (in kernel/fiasco/src/kern/mips/cpu-mips.cpp): a rich seam of rather frightening-looking processor-related initialisation activities. The Cpu::first_boot method contained this ominous-looking code:

require(c.r<0>().ar() > 0, "MIPS r1 CPUs are not supported\n");

I hadn’t noticed this earlier, and its effect is to terminate the kernel if it detects an architecture revision just like the one provided by the JZ4720. There were a few other tests of the capabilities of the processor that needed to be either disabled or reworked, and I spent some time studying the documentation concerning configuration registers and what they can tell programs about the kind of processor they are running on. Amusingly, our old friend the rdhwr instruction is enabled for user programs in this code, but since the JZ4720 has no notion of that instruction, we actually disable the instruction that would switch rdhwr on in this way.

Another area that proved to be rather tricky was that of switching interrupts on and having the system timer do its work. Early on, when laying the groundwork for the Ben in the kernel, I had made a rough translation of the CI20 code for the Ben, and we saw some of these hardware details a few articles ago. Now it was time to start the timer, enable interrupts, and have interrupts delivered as the timer counter reaches its limit. The part of the kernel concerned is Kernel_thread::bootstrap (in kernel/fiasco/src/kern/kernel_thread.cpp), where things are switched on and then we wait in a delay loop for the interrupts to cause a variable to almost magically change by itself (in kernel/fiasco/src/drivers/delayloop.cpp):

    Cpu_time t = k->clock;
    Timer::update_timer(t + 1000); // 1ms
    while (t == (t1 = k->clock))
      Proc::pause();

But the processor just got stuck in this loop forever! Clearly, I hadn’t done everything right. Some investigation confirmed that various timer registers were being set up correctly, interrupts were enabled, but that they were being signalled using the “interrupt pending 2” (IP2) flag of the processor’s “cause” register which reports exception and interrupt conditions. Meanwhile, I had misunderstood the meaning of the number in the last statement of the following code (from kernel/fiasco/src/kern/mips/bsp/qi_lb60/mips_bsp_irqs-qi_lb60.cpp):

    _ic[0] = new Boot_object<Irq_chip_ingenic>(0xb0001000);
    m->add_chip(_ic[0], 0);
    auto *c = new Boot_object<Cascade_irq>(nullptr, ingenic_cascade);
    Mips_cpu_irqs::chip->alloc(c, 1);

Here is how the CI20 had been set up (in kernel/fiasco/src/kern/mips/bsp/ci20/mips_bsp_irqs-ci20.cpp):

    _ic[0] = new Boot_object<Irq_chip_ingenic>(0xb0001000);
    m->add_chip(_ic[0], 0);
    _ic[1] = new Boot_object<Irq_chip_ingenic>(0xb0001020);
    m->add_chip(_ic[1], 32);
    auto *c = new Boot_object<Cascade_irq>(nullptr, ingenic_cascade);
    Mips_cpu_irqs::chip->alloc(c, 2);

With virtually no knowledge of the details, and superficially transcribing the code, editing here and there, I had thought that the argument to the alloc method referred to the number of “chips” (actually the number of registers dedicated to interrupt signals). But in fact, it indicates an adjustment to what the kernel code calls a “pin”. In the Mips_cpu_irq_chip class (in kernel/fiasco/src/kern/mips/mips_cpu_irqs.cpp), we actually see that this number is used to indicate the IP flag that will need to be tested. So, the correct argument to the alloc method is 2, not 1, just as it is for the CI20:

Mips_cpu_irqs::chip->alloc(c, 2);
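To see why the pin number matters, it may help to look at how the interrupt pending flags sit in the cause register. The following is a minimal sketch rather than the kernel's own code: `pin_pending` and `cause_with_ip2` are hypothetical names, and the only fact assumed is the standard MIPS32 layout in which IP0 to IP7 occupy bits 8 to 15 of Cause.

```cpp
#include <cstdint>

// The Cause register holds the interrupt pending flags IP0..IP7 in
// bits 8..15.
constexpr unsigned CAUSE_IP_SHIFT = 8;

// Test whether the IP flag for the given "pin" is raised.
constexpr bool pin_pending(std::uint32_t cause, unsigned pin) {
  return ((cause >> (CAUSE_IP_SHIFT + pin)) & 1u) != 0;
}

// A Cause value with only IP2 raised, as when the interrupt controller
// signals the processor on the Ben.
constexpr std::uint32_t cause_with_ip2 = 1u << (CAUSE_IP_SHIFT + 2);
```

Testing pin 1 against such a value never succeeds, which matches the symptom of a delay loop that waits forever, whereas testing pin 2 does.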

This fix led to another problem and the discovery of another area that I had missed: the “exception base” gets redefined in the kernel (in src/kern/mips/cpu-mips.cpp), and so I had to make sure it was consistent with the other places I had defined it by changing its value in the kernel (in src/kern/mips/mem_layout-mips32.cpp). I mentioned this adjustment previously, but it was at this stage that I realised that the exception base gets set in the kernel after all. (I had previously set it in the bootstrap code to override the typical default of 0x80000000.)

Leaving the Kernel

Although we are not quite finished with the kernel, the next significant obstacle involved starting programs that are not part of the kernel. Being a microkernel, Fiasco.OC needs various other things to offer the kind of environment that a “monolithic” operating system kernel might provide. One of these is the sigma0 program which has responsibilities related to “paging” or allocating memory. Attempting to start such programs exercises how the kernel behaves when it hands over control to these other programs. We should expect timer interrupts to periodically deliver control back to the kernel so that it can do work itself, this usually involving giving other programs a chance to run.

I was seeing the kernel in the “home straight” with regard to completing its boot activities. The Kernel_thread::init_workload method (in kernel/fiasco/src/kern/kernel_thread-std.cpp) was pretty much the last thing to be invoked in the Kernel_thread::run method (in kernel/fiasco/src/kern/kernel_thread.cpp) before normal service begins. But it was upon attempting to “activate” a thread to run sigma0 that a problem arose: the kernel never got control back! This would be my last major debugging exercise before embarking on a final excursion into “user space”.

Porting L4Re and Fiasco.OC to the Ben NanoNote (Part 5)

March 25th, 2018

We left off last time with the unenviable task of debugging a non-working system. In such a situation the outlook can seem bleak, but I mentioned a couple of strategies that can sometimes rescue the situation. The first of these is to rule out areas of likely problems, which in my case tends to involve reviewing what I have done and seeing if I have made some stupid mistakes. Naturally, it helps to have a certain amount of experience to inform this process; otherwise, practically everything might be a place where such mistakes may be lurking.

One thing that bothered me was the use of the memory map by Fiasco.OC on the Ben NanoNote. When deploying my previous experimental work to the Ben, I had become aware of limitations around where things might be stored, at least while any bootloader might be active. Care must be taken to load new code away from memory already being used, and it seems that the base of memory must also be avoided, at least at first. I wasn’t convinced that this avoidance was going to happen with the default configuration of the different components.

The Memory Map

Of particular concern were the exception vectors – where the processor jumps to if an exception or interrupt occurs – whose defaults in Fiasco.OC situate them at the base of kernel memory: 0x80000000. If the bootloader were to try and copy the code that handles exceptions to this region, I rather suspected that it would immediately cause problems.

I was also unsure whether the bootloader was able to load the payload from the MMC/MicroSD card into memory without overwriting itself or corrupting the payload as it copied things around in memory. According to the boot script that seems to apply to the Ben, it loads the payload into memory at 0x80600000:

#define CONFIG_BOOTCOMMANDFROMSD "mmc init; ext2load mmc 0 0x80600000 /boot/uImage; bootm"

Meanwhile, the default memory settings for L4Re have code loaded rather low in the kernel address space at 0x802d0000. Without really knowing what happens, I couldn’t rule out that something might get copied to that location, that the copied data might then run past 0x80600000, and that this might overwrite some other thing – the kernel, perhaps – that hadn’t been copied yet. Maybe this was just paranoia, but it was at least something that could be dealt with. So I came up with some alternative arrangements:

    0x81401000 exception handlers
    0x81400000 kernel load address
    0x80d00000 bootstrap start address
    0x80600000 payload load address when copied by bootm

I wanted to rule out memory conflicts but try and not conjure up more exotic solutions than strictly necessary. So I made some adjustments to the location of the kernel, keeping the exception vectors in the same place relative to the kernel, but moving the vectors far away from the base of memory. It turns out that there are quite a few places that need changing if you do this:

- A configuration setting, CONFIG_KERNEL_LOAD_ADDR-32, in the kernel build scripts (in kernel/fiasco/src/Modules.mips)

- The exception base, EXC_BASE, in the kernel’s linker script (in kernel/fiasco/src/kernel.mips.ld)

- The exception base, Exception_base, in a description of the kernel memory layout (in kernel/fiasco/src/kern/mips/mem_layout-mips32.cpp)

- The exception base, if it is mentioned in the bootstrap initialisation (in l4/pkg/bootstrap/server/src/ARCH-mips/crt0.S)

The location of the rest of the payload seems to be configured by just changing DEFAULT_RELOC_mips32 in the bootstrap package’s build scripts (in l4/pkg/bootstrap/server/src/Make.rules).
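Since the same addresses end up in several files, a compile-time check is one way to keep an eye on the arrangement. This is only a sketch with hypothetical names, not part of the L4Re or Fiasco.OC build. It assumes that all four addresses lie in the unmapped kseg0 region, where the physical address is obtained by dropping the top three bits, and it uses the Ben's 32MB of memory as the upper limit.

```cpp
#include <cstdint>

// Addresses from the alternative arrangement, all in kseg0.
constexpr std::uint32_t payload_load = 0x80600000u;
constexpr std::uint32_t bootstrap_start = 0x80d00000u;
constexpr std::uint32_t kernel_load = 0x81400000u;
constexpr std::uint32_t exception_base = 0x81401000u;

constexpr std::uint32_t ram_size = 32u * 1024u * 1024u;  // the Ben has 32MB

// kseg0 addresses map to physical memory by dropping the top three bits.
constexpr std::uint32_t physical(std::uint32_t kseg0_addr) {
  return kseg0_addr & 0x1fffffffu;
}

// The regions should appear in ascending order and stay within memory.
constexpr bool layout_is_ordered_and_in_ram() {
  return payload_load < bootstrap_start &&
         bootstrap_start < kernel_load &&
         kernel_load < exception_base &&
         physical(exception_base) < ram_size;
}

static_assert(layout_is_ordered_and_in_ram(),
              "regions out of order or beyond the end of memory");
```

The check says nothing about how large each component actually is, so it cannot prove that nothing overlaps, but it does show that 0x700000 bytes (7MB) separate the payload load address from the bootstrap start address, and the same again separates the bootstrap code from the kernel.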

With this done, I had hoped that I might have “moved the needle” a little and provoked a visible change when attempting to boot the system, but this was always going to be rather optimistic. Having pursued the first strategy, I now decided to pursue the second.

Update: it turns out that a more conventional memory arrangement can be used, and this is described in the summary article.

Getting in at the Start

The second strategy is to use every opportunity to get the device to show what it is doing. But how can we achieve this if we cannot boot the kernel and start some code that sets up the framebuffer? Here, there were two things on my side: the role of the bootstrap code, it being rather similar to code I have written before, and the state of the framebuffer when this code is run.

I had already discovered that provided that the code is loaded into a place that can be started by the bootloader, then the _start routine (in l4/pkg/bootstrap/server/src/ARCH-mips/crt0.S) will be called in kernel mode. And I had already looked at this code for the purposes of identifying instructions that needed rewriting as well as for setting the “exception base”. There were a few other precautions that were worth taking here before we might try and get the code to show its activity.

For instance, the code that attempts to enable a floating point unit in the processor does not apply to the Ben, so this was disabled. I was also unconvinced that the memory mapping instructions would work on the Ben: the JZ4720 does not seem to support memory pages of 256MB, with the Ben only having 32MB anyway, so I changed this to use 16MB pages instead. This must be set up correctly because any wandering into unmapped memory – visiting bad addresses – cannot be rectified before the kernel is active, and the whole point of the bootstrap code is to get the kernel active!
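For reference, the page size is chosen through the processor's PageMask register. The sketch below shows the standard MIPS32 encoding, on the assumption that the JZ4720 follows it for the page sizes it does support; the function names are hypothetical and this is not the bootstrap code itself.

```cpp
#include <cstdint>

constexpr std::uint32_t MB = 1024u * 1024u;

// Standard MIPS32 PageMask encoding: the mask field starts at bit 13 and
// holds (page size / 4KB) - 1, so 4KB pages give a mask of zero.
constexpr std::uint32_t page_mask(std::uint32_t page_size) {
  return ((page_size >> 12) - 1u) << 13;
}

// Each TLB entry maps an even/odd pair of pages of the chosen size.
constexpr std::uint32_t entry_coverage(std::uint32_t page_size) {
  return 2u * page_size;
}
```

Under these assumptions, 16MB pages correspond to a PageMask value of 0x01ffe000 instead of the 0x1fffe000 needed for 256MB pages, and a single entry with a pair of 16MB pages spans 32MB, which happens to be all of the Ben's memory.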

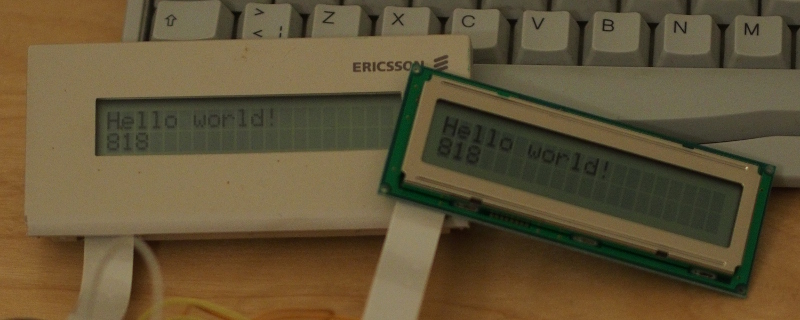

Now, it wasn’t clear just how far the processor was getting through this code before failing somewhere, but this is where the state of the framebuffer comes in. On the Ben, the bootloader initialises the framebuffer in order to show the state of the device, indicate whether it found a payload to load and boot from, complain about error conditions, and so on. It occurred to me that instead of trying to initialise a framebuffer by programming the LCD peripheral in the JZ4720, setting up various structures in memory, and deciding where these structures should even be situated, I could just read the details of the existing framebuffer from the LCD peripheral’s registers, then find out where the framebuffer resides, and then just write whatever data I liked to the framebuffer in order to communicate with the outside world.

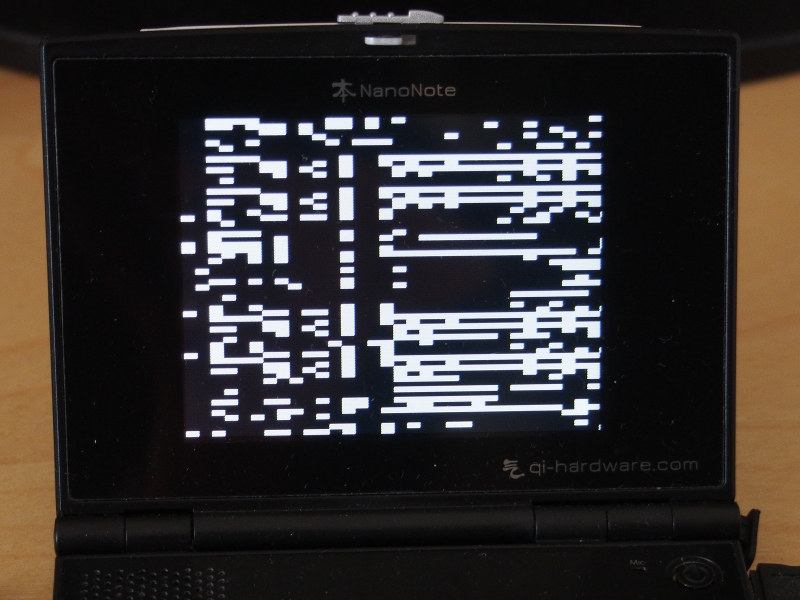

So, I would just need to write a few lines of assembly language, slip it into the bootstrap code, and then see if the framebuffer was changed and the details of interest written to the Ben’s display. Here is a fragment of code in a form that would become rather familiar after a time:

    li $8, 0xb3050040       /* LCD_DA0 */
    lw $9, 0($8)            /* &descriptor */
    lw $10, 4($9)           /* fsadr = descriptor[1] */
    lw $11, 12($9)          /* ldcmd = descriptor[3] */
    li $8, 0x00ffffff
    and $11, $8, $11        /* size = ldcmd & LCD_CMD0_LEN */
    li $9, 0xa5a5a5a5
    1:
    sw $9, 0($10)           /* *fsadr = ... */
    addiu $11, $11, -1      /* size -= 1 */
    addiu $10, $10, 4       /* fsadr += 4 */
    bnez $11, 1b            /* until size == 0 */
    nop

To summarise, it loads the address of a “descriptor” from a configuration register provided by the LCD peripheral, this register having been set by the bootloader. It then examines some members of the structure provided by the descriptor, notably the framebuffer address (fsadr) and size (a subset of ldcmd). Just to show some sign of progress, the code loops and fills the screen with a specific value, in this case a shade of grey.
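The same logic can be written out in C++ so that it can be tried away from the hardware. This is a sketch under stated assumptions: `FrameDescriptor` and `fill_words` are hypothetical names, only the two descriptor words used by the assembly fragment are interpreted, and the framebuffer is passed in as a pointer instead of being taken from fsadr so that the code can run against an ordinary array.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint32_t LCD_CMD0_LEN = 0x00ffffffu;  // length field of ldcmd

// The descriptor words used by the fragment: word 1 is the framebuffer
// address (fsadr) and word 3 is the command word (ldcmd).
struct FrameDescriptor {
  std::uint32_t words[4];

  std::uint32_t fsadr() const { return words[1]; }
  std::uint32_t length_in_words() const { return words[3] & LCD_CMD0_LEN; }
};

// Write `value` to `count` consecutive 32-bit words starting at `fb`.
// Unlike the assembly loop, which stores before testing, a count of zero
// writes nothing here.
inline void fill_words(volatile std::uint32_t *fb, std::uint32_t count,
                       std::uint32_t value) {
  for (std::uint32_t i = 0; i < count; ++i)
    fb[i] = value;
}
```

On the device, the pointer would be derived from fsadr and the count from the descriptor, with 0xa5a5a5a5 as the value to produce the grey screen.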

By moving this code around in the bootstrap initialisation routine, I could see whether the processor even managed to get as far as this little debugging fragment. Fortunately for me, it did get run, the screen did turn grey, and I could then start to make deductions about why it only got so far but no further. One enhancement to the above that I had to make after a while was to temporarily change the processor status to “error level” (ERL) when accessing things like the LCD configuration. Not doing so risks causing errors in itself, and there is nothing more frustrating than chasing down errors only to discover that the debugging code caused these errors and introduced them as distractions from the ones that really matter.

Enter the Kernel

The bootstrap code isn’t all assembly language, and at the end of the _start routine, the code attempts to jump to __main. Provided this works, the processor enters code that started out life as C++ source code (in l4/pkg/bootstrap/server/src/ARCH-mips/head.cc) and hopefully proceeds to the startup function (in l4/pkg/bootstrap/server/src/startup.cc) which undertakes a range of activities to prepare for the kernel.

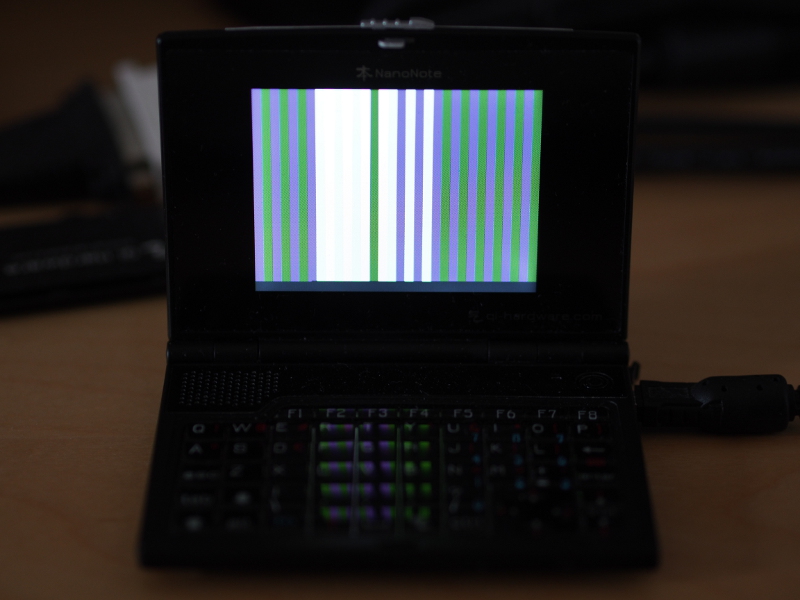

Here, my debugging routine changed form slightly, minimising the assembly language portion and replacing the simple screen-clearing loop with something in C++ that could write bit patterns to the screen. It became interesting to know what address the bootstrap code thought it should be using for the kernel, and by emitting this address’s bit pattern I could check whether the code had understood the structure of the payload. It seemed that the kernel was being entered, but upon executing instructions in the _start routine (in kernel/fiasco/src/kern/mips/crt0.S), it would hang.

The Ben NanoNote showing a bit pattern on the screen with adjacent bits using slightly different colours to help resolve individual bit values; here, the framebuffer address is shown (0x01fb5000), but other kinds of values can be shown, potentially many at a time
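A routine producing this kind of display might look something like the following sketch. It is not the code used on the Ben: the function names and colour values are invented for illustration, and it assumes 32-bit pixels and a caller that supplies a pointer to the start of a row in the framebuffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical colours (0x00RRGGBB). Adjacent bit positions alternate
// between two slightly different shades so that neighbours can be told apart.
constexpr std::uint32_t ON_EVEN = 0x00ffffffu;
constexpr std::uint32_t ON_ODD = 0x00c0c0ffu;
constexpr std::uint32_t OFF_EVEN = 0x00000000u;
constexpr std::uint32_t OFF_ODD = 0x00000040u;

// Choose a colour from the bit's value and from whether its position is odd.
constexpr std::uint32_t colour_for(std::uint32_t value, unsigned bit) {
  return ((value >> bit) & 1u)
             ? ((bit & 1u) ? ON_ODD : ON_EVEN)
             : ((bit & 1u) ? OFF_ODD : OFF_EVEN);
}

// Paint one row of pixels as 32 blocks of `block` pixels each, with the most
// significant bit on the left. `row` must have room for 32 * block pixels.
inline void draw_bits(volatile std::uint32_t *row, std::uint32_t value,
                      std::size_t block) {
  for (unsigned pos = 0; pos < 32; ++pos) {
    const unsigned bit = 31 - pos;
    for (std::size_t x = 0; x < block; ++x)
      row[pos * block + x] = colour_for(value, bit);
  }
}
```

Repeating the call for a number of consecutive rows gives blocks tall enough to read, and calling it at different vertical offsets allows several values to be shown at once.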

This now led to a long and frustrating process of detective work. With a means of at least getting the code to report its status, I had a chance of figuring out what might be wrong, but I also needed to draw on experience and ideas about likely causes. I started to draw up a long list of candidates, suggesting and eliminating things that could have been problems that weren’t. Any relief that a given thing was not the cause of the problem was tempered by the realisation that something else, possibly something obscure or beyond the limit of my own experiences, might be to blame. It was only some consolation that the instruction provoking the failure involved my nemesis from my earlier experiments: the “error level” (ERL) flag in the processor’s status register.

Porting L4Re and Fiasco.OC to the Ben NanoNote (Part 4)

March 24th, 2018

As described previously, having hopefully done enough to modify the kernel – Fiasco.OC – for the Ben NanoNote, it then became necessary to investigate the bootstrap package that is responsible for setting up the hardware and starting the kernel. This package resides in the L4Re distribution, which is technically a separate thing, even though both L4Re and Fiasco.OC reside in the same published repository structure.

Before continuing into the details, it is worth noting which things need to be retrieved from the L4Re section of the repository in order to avoid frustration later on with package dependencies. I had previously discovered that the following package installation operation would be required (from inside the l4 directory):

    svn update pkg/acpica pkg/bootstrap pkg/cxx_thread pkg/drivers pkg/drivers-frst pkg/examples \
        pkg/fb-drv pkg/hello pkg/input pkg/io pkg/l4re-core pkg/libedid pkg/libevent \
        pkg/libgomp pkg/libirq pkg/libvcpu pkg/loader pkg/log pkg/mag pkg/mag-gfx pkg/x86emu

With the listed packages available, it should be possible to build the examples that will eventually interest us. Some of these appear superfluous – x86emu, for instance – but some of the more obviously-essential packages have dependencies on these other packages, and so we cannot rely on our intuition alone!

Also needed when building a payload are some path definitions in the l4/conf/Makeconf.boot file. Here is what I used:

    MODULE_SEARCH_PATH += $(L4DIR_ABS)/../kernel/fiasco/mybuild
    MODULE_SEARCH_PATH += $(L4DIR_ABS)/conf/examples
    MODULE_SEARCH_PATH += $(L4DIR_ABS)/pkg/io/io/config
    BOOTSTRAP_SEARCH_PATH = $(L4DIR_ABS)/conf/examples
    BOOTSTRAP_SEARCH_PATH += $(L4DIR_ABS)/../kernel/fiasco/mybuild
    BOOTSTRAP_SEARCH_PATH += $(L4DIR_ABS)/pkg/io/io/config
    BOOTSTRAP_MODULES_LIST = $(L4DIR_ABS)/conf/modules.list

This assumes that the build directory used when building the kernel is called mybuild. The Makefile will try and copy the kernel into the final image to be deployed and so needs to know where to find it.

Describing the Ben (Again)

Just as we saw with the kernel, there is a need to describe the Ben and to audit the code to make sure that it stands a chance of working on the Ben. This is done slightly differently in L4Re but the general form of the activity is similar, defining the following:

- An architecture version (MIPS32r1) for the JZ4720 (in l4/mk/arch/Kconfig.mips.inc)

- A platform configuration for the Ben (in l4/mk/platforms)

- Some platform details in the bootstrap package (in l4/pkg/bootstrap/server/src)

- Some hardware details related to memory and interrupts (in l4/pkg/io/io/config/plat-qi_lb60)

For the first of these, I introduced a configuration setting (CPU_MIPS_32R1) to allow us to distinguish between the Ben’s SoC (JZ4720) and other processors, just as I did in the kernel code. With this done, the familiar task of hunting down problematic assembly language instructions can begin, and these can be divided into the following categories:

- Those that can be rewritten using other instructions that are available to us

- Those that must be “trapped” and handled by the kernel

Candidates for the former category include all unprivileged instructions that the JZ4720 doesn’t support, such as ext and ins. Where privileged instructions or ones that “bridge” privileges in some way are used, we can still rewrite them if they appear in the bootstrap code, since this code is also running in privileged mode. Here is an example of such privileged instruction rewriting (from l4/pkg/bootstrap/server/src/ARCH-mips/crt0.S):

    #if defined(CONFIG_CPU_MIPS_32R1)
        cache 0x01, 0($a0) # Index_Writeback_Inv_D
        nop
        cache 0x08, 0($a0) # Index_Store_Tag_I
    #else
        synci 0($a0)
    #endif

Candidates for the latter category include all awkward privileged or privilege-escalating instructions outside the bootstrap package. Fortunately, though, we don’t need to worry about them very much at all. Since the kernel will be obliged to trap them, we can just keep them where they are and concede that there is nothing else we can do with them.

However, there is one pitfall: these preserved-but-unsupported instructions will upset the compiler! Consider the use of the now overly-familiar rdhwr instruction. If it is mentioned in an assembly language statement, the compiler will notice that amongst its clean MIPS32r1-compliant output, something is inserting an unrecognised instruction, yielding that error we saw earlier:

Error: opcode not supported on this processor: mips32 (mips32)

But we do know what we’re doing! So how can we persuade the compiler? The solution is to override what the compiler (or assembler) thinks it should be producing by introducing a suitable directive as in the following example (from l4/pkg/l4re-core/l4sys/include/ARCH-mips/cache.h):

    asm volatile (
      ".set push\n"
      ".set mips32r2\n"
      "rdhwr %0, $1\n"
      ".set pop"
      : "=r"(step));

Here, with the .set directives, we switch this little region of code to MIPS32r2 compliance and emit our forbidden instruction into the output. Since the kernel will take care of it in the end, the compiler shouldn’t be made to feel that it has to defend us against it.

In L4Re, there are also issues experienced with the CI20 that will also affect the Ben, such as an awkward and seemingly compiler-related issue affecting the way programs are started. In this regard, I just kept my existing patches for these things applied.

My other platform-related adjustments for the Ben have mostly borrowed from support for the CI20 where it existed. For instance, the bootstrap package’s definition for the Ben (in l4/pkg/bootstrap/server/src/platform/qi_lb60.cc) just takes the CI20 equivalent, eliminates superfluous features, modifies details that are different between the SoCs, and changes identifiers. The general definition for the Ben (in l4/mk/platforms/qi_lb60.conf) merely acknowledges differences in some basic platform details.

The CI20 was not supported with a hardware definition describing memory regions and interrupts used by the io package. Taking other devices as inspiration, I consulted the device documentation and wrote a definition when experimenting with the CI20. For the Ben, the form of this definition (in l4/pkg/io/io/config/plat-qi_lb60/hw_devices.io) remains similar but is obviously adjusted for the SoC differences.

Device Drivers and Output

One topic that I have not really mentioned at all is that pertaining to device drivers. I would not have even started this work if I didn’t feel there was a chance of seeing some signs of success from the Ben. Although the Ben, like the CI20, has the capability of exposing a serial console to the outside world, meaning that it can emit messages via a cable to another computer and receive input from that computer, unlike the CI20, its serial console pins are not particularly convenient to use: they really require wires to be soldered to some tiny pads that are found in the battery compartment of the device.

Now, my soldering skills are not very good, and I also want to be able to put the battery back into the device in future. I did try and experiment by holding wires against the pads, this working once or twice by showing output when booting the Ben into its more typical Linux-based environment. But such experiments proved to be unsustainable and rather uncomfortable, needing some kind of “guitar grip” while juggling cables and holding down buttons. So I quickly knew that I would need to get output from the Ben in other ways.

Having deployed low-level payloads to the Ben before, I knew something about the framebuffer, so I had some confidence about initialising it and getting something on the screen that might tell me what has or hasn’t happened. And I adapted my code from this previous effort, itself being derived from driver code written by the people responsible for the Ben, wrapping it up for L4Re. I tried to keep this code minimally different from its previous incarnation, meaning that I could eliminate certain kinds of mistakes in case the code didn’t manage to do its job. With this in place, I felt that I could now consider trying out my efforts and seeing what, if anything, might happen.

Attempting to Bootstrap

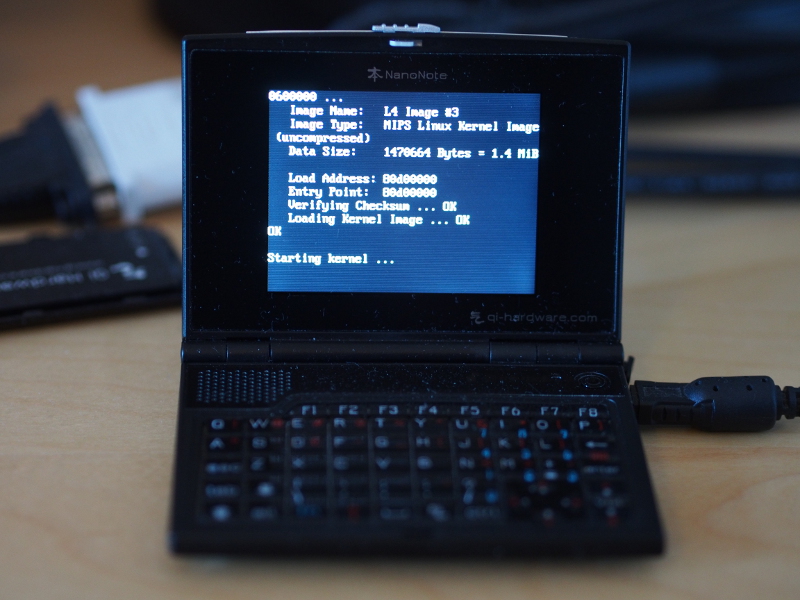

Being in the now-familiar position of believing that enough has been done to make the software run, I now considered an attempt at actually bootstrapping the kernel. It may sound naive, but I almost expected to be able to compile everything – the kernel, L4Re, my drivers – and for them all to work together in harmony and produce at least something on the display. But instead, after “Starting kernel …”, nothing happened.

It should be said that in these kinds of exercises, just one source of failure need present itself and the outcome is, of course, failure. And I can confirm that there were many sources of failure at this point. The challenges, then, are to identify all of these and then to eliminate them all. But how can you even know what all of these sources of failure actually are? It seemed disheartening, but then there are two kinds of strategy that can be employed: to investigate areas likely to be causing problems, and to take every opportunity to persuade the device to tell us what is happening. And with this, the debugging would begin.

Porting L4Re and Fiasco.OC to the Ben NanoNote (Part 3)

March 23rd, 2018

So far, in this exercise of porting L4Re and Fiasco.OC to the Ben NanoNote, we have toured certain parts of the kernel, made adjustments for the compiler to generate suitable code, and added some descriptions of the device itself. But, as we saw, the Ben needs some additional changes to be made to the software in places where certain instructions are used that it doesn’t support. Attempting to compile the kernel will most likely end with an error if we ignore such matters, because although the C and C++ code will produce acceptable instructions, upon encountering an assembly language statement containing an unacceptable instruction, the compiler will probably report something like this:

Error: opcode not supported on this processor: mips32 (mips32)

So, we find ourselves in a situation where the compiler is doing the right thing for the code it is generating, but it also notices when the programmer has chosen to do what is now the wrong thing. We must therefore track down these instructions and offer a supported alternative. Previously, we introduced a special configuration setting that might be used to indicate to the compiler when to choose these alternative sequences of instructions: CPU_MIPS32_R1. This gets expanded to CONFIG_CPU_MIPS32_R1 by the build system and it is this identifier that gets used in the program code.

Those Unsupported Instructions

I have put off giving out the details so far, but now is as good a time as any to provide some about the instructions that the JZ4720 (the SoC in the Ben NanoNote) doesn’t seem to support. Some of them are just conveniences, offering a single instruction where many would otherwise be needed. Others offer functionality that is not always trivially replicated.

| Instructions | Description | Privileges |
|---|---|---|
| di, ei | Disable, enable interrupts | Privileged |
| ext | Extract bits from register | Unprivileged |
| ins | Insert bits into register | Unprivileged |
| rdhwr | Read hardware register | Unprivileged, accesses privileged information |
| synci | Synchronise instruction cache | Unprivileged, performs privileged operations |

We have already mentioned rdhwr, and this is precisely the kind of instruction that can pose problems, these mostly being concerned with it offering access to some (supposedly) privileged information from an unprivileged processor mode. However, since the kernel runs in a privileged mode, typically referred to as “kernel mode”, we won’t see rdhwr when doing our modifications to the kernel. And since the need to provide rdhwr also applied to the JZ4780 (the SoC in the MIPS Creator CI20), it turned out that I didn’t need to do much in addition to what others had already done in supporting it.

Another instruction that requires a bridging of privilege levels is synci. If we discover synci being used in the kernel, it is possible to rewrite it in terms of the equivalent cache instructions. However, outside the kernel in unprivileged mode, those cache instructions cannot be used and we would not wish to support them either, because “user mode” programs are not meant to be playing around with such aspects of the hardware. The solution for such situations is to “trap” synci when it gets used in unprivileged code and to handle it using the same mechanism as that employed to handle rdhwr: to treat it as a “reserved instruction”.

Thus, some extra code is added in the kernel to support this “trap” mechanism, but where we can just replace the instructions, we do so as in this example (from kernel/fiasco/src/kern/mips/alternatives.cpp):

#ifdef CONFIG_CPU_MIPS32_R1

asm volatile ("cache 0x01, %0\n"

"nop\n"

"cache 0x08, %0"

: : "R"(orig_insn[i]));

#else

asm volatile ("synci %0" : : "R"(orig_insn[i]));

#endif

We could choose not to bother doing this even in the kernel, instead just trapping all usage of synci. But this would have a performance impact, and L4 is ostensibly very much about performance, and so the opportunity is taken to maximise it by going round and fixing up the code in all these places instead. (Note that I’ve used the nop instruction above, but maybe I should use ehb. It’s probably something to take another look at, perhaps more generally with regard to which instruction I use in these situations.)

The other unsupported instructions don’t create as many problems. The di (disable interrupts) and ei (enable interrupts) instructions are really shorthand for modifications to the processor’s status register, albeit performing those modifications “atomically”. In principle, in cases where I have written out the equivalent sequence of instructions but not done anything to “guard” these instructions from untimely interruptions or exceptions, something bad could happen that wouldn’t have happened with the di or ei instructions themselves.

Maybe I will revisit this, too, and see what the risks might actually be, but for the purposes of getting the kernel working – which is where these instructions appear – the minimal solution seemed reasonably adequate. Here is an extract from a statement employing the ei instruction (from kernel/fiasco/src/drivers/mips/processor-mips.cpp):

#ifdef CONFIG_CPU_MIPS32_R1

ASM_MFC0 " $t0, $12\n"

"ehb\n"

"or $t0, $t0, %[ie]\n"

ASM_MTC0 " $t0, $12\n"

#else

"ei\n"

#endif

Meanwhile, the ext (extract) and ins (insert) instructions have similar properties in that they too access parts of registers, replacing sequences of instructions that do the work piece by piece. One challenge that they pose is that they appear in potentially many different places, some with minimal register use, and the equivalent instruction sequence may end up needing an extra register to get the same work done. Fortunately, though, those equivalent instructions are all perfectly usable at whichever privilege level happens to be involved. Here is an extract from a statement employing the ins instruction (from kernel/fiasco/src/kern/mips/thread-mips.cpp):

#ifdef CONFIG_CPU_MIPS32_R1

" andi $t0, %[status], 0xff \n"

" li $t1, 0xffffff00 \n"

" and $t2, $t2, $t1 \n"

" or $t2, $t2, $t0 \n"

#else

" ins $t2, %[status], 0, 8 \n"

#endif

Note how temporary registers are employed to isolate the bits from the status register and to erase bits in the $t2 register before these two things are combined and stored in $t2.
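As a rough illustration of that equivalence, the following C++ sketch models what ins and ext compute and walks through the four-instruction replacement one step at a time. The function names (field_mask, ins_model, ext_model, ins_low_byte_r1) are hypothetical and do not appear in Fiasco.OC; only the bit manipulation is meant to mirror the assembly language above.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: a mask of `size` one-bits starting at bit `pos`.
// Valid for size >= 1 and pos + size <= 32.
static std::uint32_t field_mask(unsigned pos, unsigned size)
{
    assert(size >= 1 && pos + size <= 32);
    std::uint32_t low = (size == 32) ? 0xffffffffu : ((1u << size) - 1u);
    return low << pos;
}

// Model of "ins target, source, pos, size": copy the low `size` bits of
// source into target at bit position `pos`, leaving the other bits alone.
static std::uint32_t ins_model(std::uint32_t target, std::uint32_t source,
                               unsigned pos, unsigned size)
{
    std::uint32_t mask = field_mask(pos, size);
    return (target & ~mask) | ((source << pos) & mask);
}

// Model of "ext target, source, pos, size": extract `size` bits of source
// starting at `pos`, right-aligned in the result.
static std::uint32_t ext_model(std::uint32_t source, unsigned pos, unsigned size)
{
    return (source & field_mask(pos, size)) >> pos;
}

// The MIPS32r1 replacement from the extract above, step by step.
static std::uint32_t ins_low_byte_r1(std::uint32_t t2, std::uint32_t status)
{
    std::uint32_t t0 = status & 0xffu;   // andi $t0, %[status], 0xff
    std::uint32_t t1 = 0xffffff00u;      // li   $t1, 0xffffff00
    t2 = t2 & t1;                        // and  $t2, $t2, $t1
    t2 = t2 | t0;                        // or   $t2, $t2, $t0
    return t2;
}
```

For any inputs, ins_low_byte_r1(t2, status) should give the same result as ins_model(t2, status, 0, 8). The price paid on the real hardware is the pair of extra temporary registers.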

Bridging the Privilege Gap

The rdhwr instruction has been mentioned quite a few times already. In the kernel, it is handled in the kernel/fiasco/src/kern/mips/exception.S file, specifically in the routine called “reserved_insn”. When the processor encounters an instruction it doesn’t understand, the kernel should have been configured to send it here. I will admit that I knew little to nothing about what to do to handle such situations, but the people who did the MIPS port of the kernel had laid the foundations by supporting one rdhwr variant, and I adapted their work to handle another.

In essence, what happens is that the processor “shows up” in the reserved_insn routine with the location of the bad instruction in its “exception program counter” register. By loading the value stored at that location, we obtain the instruction – or its value, at least – and can then inspect this value to see if we recognise it and can do anything with it. Here is the general representation of rdhwr with an example of its use:

| SPECIAL3 | _____ | t | s | _____ | RDHWR |
|---|---|---|---|---|---|
| 011111 | 00000 | 01000 | 00001 | 00000 | 111011 |

The first and last portions of the above representation identify the instruction in general, with the bits for the second and next-to-last portions being set to zero presumably because they are either not needed to encode an instruction in this category, or they encode two parameters that are not needed by this particular instruction. To be honest, I haven’t checked which explanation applies, but I suspect it is the latter.

This leaves the remaining portions to indicate specific registers: the target (t) and source (s). With t=8, the result is written to register $8, which is normally known as $t0 (or just t0) in MIPS assembly language. Meanwhile, with s=1, the source register has been given as $1, which is the SYNCI_Step hardware register. So, the above is equivalent to the following:

rdhwr $t0, $1

To reproduce this same realisation in code, we must isolate the parts of the value that identify the instruction. For rdhwr accessing the SYNCI_Step hardware register, this means using a mask that preserves the SPECIAL3, RDHWR, s and blank regions, ignoring the target register value t because it will change according to specific circumstances. Applying this mask to the instruction value and comparing it to an expected value is done rather like this:

li $k0, 0x7c00083b # $k0 = SPECIAL3, blank, s=1, blank, RDHWR

li $at, 0xffe0ffff # $at = define a mask to mask out t

and $at, $at, $k1 # $at = the mask applied to the instruction value

Now, if $at is equal to $k0, the instruction value is identified as encoding rdhwr accessing SYNCI_Step, with the target register being masked out so as not to confuse things. Later on, the target register is itself selected and some trickery is employed to get the appropriate data into that register before returning from this routine.
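The same test can be sketched in C++, which may make the field arithmetic easier to check by hand. The two constants are taken from the assembly language extract above; the function names, including encode_rdhwr, are hypothetical helpers for illustration and are not part of the kernel.

```cpp
#include <cassert>
#include <cstdint>

// Values taken from the assembly language extract above.
const std::uint32_t RDHWR_SYNCI_STEP = 0x7c00083bu; // SPECIAL3, s=1, RDHWR, t cleared
const std::uint32_t MASK_OUT_T       = 0xffe0ffffu; // clears bits 20..16 (the t field)

// True if insn encodes "rdhwr <any register>, $1".
bool is_rdhwr_synci_step(std::uint32_t insn)
{
    return (insn & MASK_OUT_T) == RDHWR_SYNCI_STEP;
}

// The target register number occupies bits 20..16.
unsigned rdhwr_target(std::uint32_t insn)
{
    return (insn >> 16) & 0x1fu;
}

// Hypothetical helper: assemble the six fields shown in the table above,
// with both blank regions left as zero.
std::uint32_t encode_rdhwr(unsigned t, unsigned s)
{
    assert(t < 32 && s < 32);
    return (0x1fu << 26) | (t << 16) | (s << 11) | 0x3bu;
}
```

With t=8 and s=1, encode_rdhwr produces 0x7c08083b, which is the bit pattern in the table. Masking out t leaves 0x7c00083b, and rdhwr_target recovers the register number 8 for later use.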

For the above case and for the synci instruction, the work that needs doing once such an instruction has been identified is equivalent to what would have happened had it been possible to just insert into the code the alternative sequence of instructions that achieves the same thing. So, for synci, the equivalent cache instructions are executed before control is returned to the instruction after synci in the program where it appeared. Thus, upon encountering an unsupported instruction, control is handed over from an unprivileged program to the kernel, the instruction is identified and handled using the necessary privileged instructions, and then control is handed back to the unprivileged program again.
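The overall flow can be modelled in a few lines of C++. This is only a sketch under several assumptions, not the code in exception.S: the TrapFrame structure and handle_reserved_insn function are hypothetical, the cache line size of 32 bytes is assumed, the synci bit pattern comes from the MIPS32 instruction set description and not from the kernel sources, and instructions in branch delay slots are ignored.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical model of the state saved when the exception is taken.
struct TrapFrame
{
    std::uint32_t epc;        // address of the faulting instruction
    std::uint32_t regs[32];   // general purpose registers
    unsigned cache_syncs;     // stands in for the privileged cache operations
};

// Assumed instruction cache line size; the real value is processor-specific.
const std::uint32_t ASSUMED_SYNCI_STEP = 32;

// Returns true if the instruction was recognised and emulated.
bool handle_reserved_insn(TrapFrame &frame, std::uint32_t insn)
{
    assert((frame.epc & 3u) == 0);                  // instructions are word-aligned

    if ((insn & 0xffe0ffffu) == 0x7c00083bu)        // rdhwr t, $1 (SYNCI_Step)
    {
        unsigned t = (insn >> 16) & 0x1fu;
        if (t != 0)                                 // $0 is hard-wired to zero
            frame.regs[t] = ASSUMED_SYNCI_STEP;
    }
    else if ((insn & 0xfc1f0000u) == 0x041f0000u)   // synci offset(base)
    {
        ++frame.cache_syncs;                        // real code would issue cache ops
    }
    else
    {
        return false;                               // not something we can emulate
    }

    frame.epc += 4;   // resume after the emulated instruction
    return true;
}
```

The important detail is the final adjustment of the saved program counter: without it, returning from the exception would execute the same unsupported instruction again and trap indefinitely.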

In fact, most of my efforts in exception.S were not really directed towards these two awkward instructions. Instead I had to deal with the use of quite a number of ext and ins instructions. Although it seems tempting to just trap those as well and to provide handlers for them, that would add considerable overhead, and so I added some macros to provide the same functionality when building the kernel for the Ben.

Prepare for Launch

Looking at my patches for the kernel now, I can see that there isn’t much else to cover. One or two details are rather important in the context of the Ben and how it manages to boot, however, and the process of figuring out those details was, like much else in this exercise, time-consuming, slightly frustrating, and left surprisingly little trace once the solution was found. At this stage, not everything was perfectly transcribed or expressed, leaving a degree of debugging activity that would also need to be performed in the future.

So, with a kernel that might be runnable, I considered what it would take to actually launch that kernel. This led me into the L4 Runtime Environment (L4Re) code and specifically to the bootstrap package. It turns out that the kernel distribution delegates such concerns to other software, and the bootstrap package sits uneasily alongside other packages, it being perhaps the only one amongst them that can exercise as much privilege as the kernel because its code actually runs at boot time before the kernel is started up.

Porting L4Re and Fiasco.OC to the Ben NanoNote (Part 2)

March 22nd, 2018

Having undertaken some initial investigations into running L4Re and Fiasco.OC on the MIPS Creator CI20, I envisaged attempting to get this software running on the Ben NanoNote, too. For a while, I put this off, feeling confident that when I finally got round to it, it would probably be a matter of just choosing the right compiler options and then merely fixing all the mistakes I had made in my own driver code. Little did I know that even the most trivial activities would prove more complicated than anticipated.

As you may recall, I had noted that a potentially viable approach to porting the software would merely involve setting the appropriate compiler switches for “soft-float” code, thus avoiding the generation of floating point instructions that the JZ4720 – the SoC on the Ben NanoNote – would not be able to execute. A quick check of the GCC documentation indicated the availability of the -msoft-float switch. And since I have a working cross-compiler for MIPS as provided by Debian, there didn’t seem to be much more to it than that. Until I discovered that the compiler doesn’t seem to support soft-float output at all.

I had hoped to avoid building my own cross-compiler, and apart from enthusiastic (and occasionally successful) attempts to build the Debian ones before they became more generally available, the last time I really had anything to do with this was when I first developed software for the Ben. As part of the general support for the device an OpenWrt distribution had been made available. Part of that was the recipe for building the cross-compiler and other tools, needed for building a kernel and all the software one would deploy on a device. I am sure that this would still be a good place to look for a solution, but I had heard things about Buildroot and so set off to investigate that instead.

So although Buildroot, like OpenWrt, is promoted as a way of building an entire system, it too offers help in building just the toolchain if that is all you need. Getting it to build the appropriately-configured cross-compiler is a matter of the familiar “make menuconfig” seen from the Linux kernel source distribution, choosing things in a menu – for us, asking for a soft-float toolchain, also enabling C++ support – and then running “make toolchain”. As a result, I got a range of tools in the output/host/bin directory prefixed with mipsel-buildroot-linux-uclibc.

Some Assembly Required

Changing the compiler settings for Fiasco.OC (in kernel/fiasco/src/Makeconf.mips) and L4Re (in l4/mk/arch/Makeconf.mips), making sure not to enable any floating point support in Fiasco.OC, and recompiling the code to produce soft-float output was straightforward enough. However, despite the portability of this software, it isn’t completely C and C++ code: lurking in various places (typically in mips or ARCH-mips directories) are assembly language source files with the .S suffix, and in some C and C++ files one can also find “asm” statements which embed assembly language instructions within higher-level code.

With the assumption that by specifying the right compiler switches, no floating point instructions will be produced from C or C++ source code, all that remains is to determine whether any of these other code sections mention such forbidden instructions. It was asserted that Fiasco.OC doesn’t use any floating point instructions at all. Meanwhile, I couldn’t find any floating point instructions in the generated code: “mipsel-linux-gnu-objdump -D some-output-file” (or, indeed, “mipsel-buildroot-linux-uclibc-objdump -D some-output-file”) now started to become a familiar acquaintance if not exactly a friend!

In fact, the assembly language files and statements would provide other challenges in the form of instructions unsupported by the JZ4720. Again, I had the choice of either trying to support MIPS32r2 instructions, like rdhwr, by providing “reserved instruction” handlers, or to rewrite these instructions in forms suitable for the JZ4720. At least within Fiasco.OC – the “kernel” – where the environment for executing instructions is generally privileged, it is possible to reformulate MIPS32r2 instructions in terms of others. I will return to the details of these instructions later on.

Where to Find Things

Having spent all this time looking around in the L4Re and Fiasco.OC code, it is perhaps worth briefly mentioning where certain things can be found. The heart of the action in the kernel is found in these places:

| Directory | Significance |
|---|---|
| kernel/fiasco/src | The top-level directory of the kernel sources, having some MIPS-specific files |
| kernel/fiasco/src/drivers/mips | Various hardware abstractions related to MIPS |
| kernel/fiasco/src/jdb/mips | MIPS-specific support code for the kernel debugger (which I don’t use) |
| kernel/fiasco/src/kern/mips | MIPS-specific support code for the kernel itself |
| kernel/fiasco/src/templates | Device configuration details |

As noted above, I don’t use the kernel debugger, but I still made some edits that might make it possible to use it later on. For the most part, the bulk of my time and effort was spent in the src/kern/mips hierarchy, occasionally discovering things in src/drivers/mips that also needed some attention.

Describing the Ben

So it started to make sense to consider how the Ben might be described in terms of a kernel configuration, and whether we might want to indicate a less sophisticated revision of the architecture so that we could test for it in the code and offer alternative sequences of instructions where possible. There are a few different places where hardware platforms are described within Fiasco.OC, and I ended up defining the following:

- An architecture version (MIPS32r1) for the JZ4720 (in kernel/fiasco/src/kern/mips/Kconfig)

- A definition for the Ben itself (in kernel/fiasco/src/templates/globalconfig.out.mips-qi_lb60)

- A board entry for the Ben (in kernel/fiasco/src/kern/mips/bsp/qi_lb60/Kconfig) as part of a board-specific collection of functionality

This is not by any means enough, even disregarding any code required to do things specific to the Ben. But with the additional configuration setting for the JZ4720, which I called CPU_MIPS32_R1, it becomes possible to go around inside the kernel code and start to mark up places which need different instruction sequences for the Ben, using CONFIG_CPU_MIPS32_R1 as the symbol corresponding to this setting in the code itself. There are places where this new setting will also change the compiler’s behaviour: in kernel/fiasco/src/Makeconf.mips, the -march=mips32 compiler switch is activated by the setting, preventing the compiler from generating instructions we do not want.

For the board-specific functionality (found in kernel/fiasco/src/kern/mips/bsp/qi_lb60), I took the CI20’s collection of files as a starting point. Fortunately for me, the Ben’s JZ4720 and the CI20’s JZ4780 are so similar that I could, with reference to Linux kernel code and other sources of documentation, make a first effort at support for the Ben by transcribing and editing these files. Some things I didn’t understand straight away, and I only later discovered what some parameters to certain methods really mean.

But generally, this work was simply a matter of seeing what peripheral registers were mentioned in the CI20 version, figuring out whether those registers were present in the earlier SoC, and determining whether their locations were the same or whether they had been moved around from one product to the next. Let us take a brief look at the registers associated with the timer/counter unit (TCU) in the JZ4720 and JZ4780 (with apologies for WordPress converting “x” into a multiplication symbol in some places):

| JZ4720 (Ben NanoNote) registers | JZ4720 offsets | JZ4720 size | JZ4780 (MIPS Creator CI20) registers | JZ4780 offsets | JZ4780 size |
|---|---|---|---|---|---|
| TER, TESR, TECR (timer enable, set, clear) | 0x10, 0x14, 0x18 | 8-bit | TER, TESR, TECR (timer enable, set, clear) | 0x10, 0x14, 0x18 | 16-bit |
| TFR, TFSR, TFCR (timer flag, set, clear) | 0x20, 0x24, 0x28 | 32-bit | TFR, TFSR, TFCR (timer flags, set, clear) | 0x20, 0x24, 0x28 | 32-bit |
| TMR, TMSR, TMCR (timer mask, set, clear) | 0x30, 0x34, 0x38 | 32-bit | TMR, TMSR, TMCR (timer mask, set, clear) | 0x30, 0x34, 0x38 | 32-bit |
| TDFR0, TDHR0, TCNT0, TCSR0 (timer data full match, half match, counter, control) | 0x40, 0x44, 0x48, 0x4c | 16-bit | TDFR0, TDHR0, TCNT0, TCSR0 (timer data full match, half match, counter, control) | 0x40, 0x44, 0x48, 0x4c | 16-bit |
| TSR, TSSR, TSCR (timer stop, set, clear) | 0x1c, 0x2c, 0x3c | 8-bit | TSR, TSSR, TSCR (timer stop, set, clear) | 0x1c, 0x2c, 0x3c | 32-bit |

We can see how the later product (JZ4780) has evolved from the earlier one (JZ4720), with some registers supporting more bits, exposing control over an increased number of timers. A lot of the details are the same, which was fortunate for me! Even the oddly-located timer stop registers, separated by intervals of 16 bytes (0x10) instead of 4 bytes, have been preserved between the products.
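To make the comparison a little more concrete, here is a small C++ sketch that records the offsets from the table and models one value/set/clear register trio in software. It is not the code from the board-specific directory: the RegisterTrio type and stop_register_offset function are hypothetical, and the sketch assumes that writing 1 bits to a "set" register sets the corresponding bits in the value register, that writing 1 bits to a "clear" register clears them, and that bit n corresponds to timer n.

```cpp
#include <cassert>
#include <cstdint>

// Offsets from the table above, relative to the start of the TCU block.
enum TcuOffset : std::uint32_t
{
    TER = 0x10, TESR = 0x14, TECR = 0x18,   // timer enable, set, clear
    TFR = 0x20, TFSR = 0x24, TFCR = 0x28,   // timer flag, set, clear
    TMR = 0x30, TMSR = 0x34, TMCR = 0x38,   // timer mask, set, clear
    TSR = 0x1c, TSSR = 0x2c, TSCR = 0x3c    // timer stop, set, clear
};

// Most trios are 4 bytes apart; the stop registers are 16 bytes apart.
static_assert(TESR - TER == 4 && TECR - TESR == 4, "enable trio stride");
static_assert(TSSR - TSR == 0x10 && TSCR - TSSR == 0x10, "stop trio stride");

// Hypothetical helper: offset of the stop register (0), its set register (1)
// or its clear register (2).
std::uint32_t stop_register_offset(unsigned index)
{
    assert(index < 3);
    return 0x1cu + 0x10u * index;
}

// Hypothetical software model of one value/set/clear trio (see assumptions above).
struct RegisterTrio
{
    std::uint32_t value;
    std::uint32_t width_mask;   // 0xff for 8-bit, 0xffff for 16-bit registers

    void write_set(std::uint32_t bits)   { value |= bits & width_mask; }
    void write_clear(std::uint32_t bits) { value &= ~(bits & width_mask); }
};
```

Under these assumptions, asking the 8-bit JZ4720 enable register for a timer numbered 8 or above has no effect, whereas the 16-bit JZ4780 register accepts it, which is one way of picturing the "increased number of timers".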

One interesting difference is the absence of the “operating system timer” in the JZ4720. This is a 64-bit counter provided by the JZ4780, but for the Ben it seems that we have to make do with the standard 16-bit timers provided by both products. Otherwise, for this part of the hardware, it is a matter of making sure the fundamental operations look reasonable – whether the registers are initialised sensibly – and then seeing how this functionality is used elsewhere. A file called tcu_jz4740.cpp in the board-specific directory for the Ben preserves this information. (Note that the JZ4720 is largely the same as the JZ4740 which can be considered as a broader product category that includes the JZ4720 as a variant with slightly reduced functionality.)

In the same directory, there is a file covering timer functionality from the perspective of the kernel: timer-jz4740.cpp. Here, the above registers are manipulated to realise certain operations – enabling and disabling timers, reading them, indicating which interrupt they may cause – and the essence of this work again involves checking documentation sources, register layouts, and making sure that the intent of the code is preserved. It may be mundane work, but any little detail that is not correct may prevent the kernel from working.

Covering the Ground

At this point, the essential hardware has mostly been described, building on all the work done by others to port the kernel to the MIPS architecture and to the CI20, merely adding a description of the differences presented by the Ben. When I made these changes, I was slowly immersing myself in the code, writing things that I felt I mostly understood from having previously seen code accessing certain hardware features of the Ben. But I knew that there was still some way to go before being able to expect anything to actually work.

From this point, I would now need to confront the unimplemented instructions, deal with the memory layout, and figure out how the kernel actually gets launched in the first place. This would also mean that I could no longer keep just adding and changing code and feeling like progress was being made: I would actually have to try and get the Ben to run something. And as those of us who write software know very well, there can be nothing more punishing than being confronted with the behaviour of a program that is incorrect, with the computer caring not about intentions or aspirations but only about executing the logic whether it is correct or not.

Porting L4Re and Fiasco.OC to the Ben NanoNote (Part 1)

March 21st, 2018

For quite some time, I have been interested in alternative operating system technologies, particularly kernels beyond the likes of Linux. Things like the Hurd and technologies associated with it, such as Mach, seem like worthy initiatives, and contrary to largely ignorant and conveniently propagated myths, they are available and usable today for anyone bothered to take a look. Indeed, Mach has had quite an active life despite being denigrated for being an older-generation microkernel with questionable performance credentials.

But one technological branch that has intrigued me for a while has been the L4 family of microkernels. Starting out with the motivation to improve microkernel performance, particularly with regard to interprocess communication, different “flavours” of L4 have seen widespread use and, like Mach, have been ported to different hardware architectures. One of these L4 implementations, Fiasco.OC, appeared particularly interesting in this latter regard, in addition to various other features it offers over earlier L4 implementations.

Meanwhile, I have had some success with software and hardware experiments with the Ben NanoNote. As you may know or remember, the Ben NanoNote is a “palmtop” computer based on an existing design (apparently for a pocket dictionary product) that was intended to offer a portable computing experience supported entirely by Free Software, not needing any proprietary drivers or firmware whatsoever. Had the Free Software Foundation been certifying devices at the time of its introduction, I imagine that it would have received the “Respects Your Freedom” certification. So, it seems to me that it is a worthy candidate for a Free Software porting exercise.

The Starting Point

Now, it so happened that Fiasco.OC received some attention with regard to being able to run on the MIPS architecture. The Ben NanoNote employs a system-on-a-chip (SoC) whose own architecture closely (and deliberately) resembles the MIPS architecture, but all information about the JZ4720 SoC specifies “XBurst” as the architecture name. In fact, one can regard XBurst as a clone of a particular version of the MIPS architecture with some additional instructions.

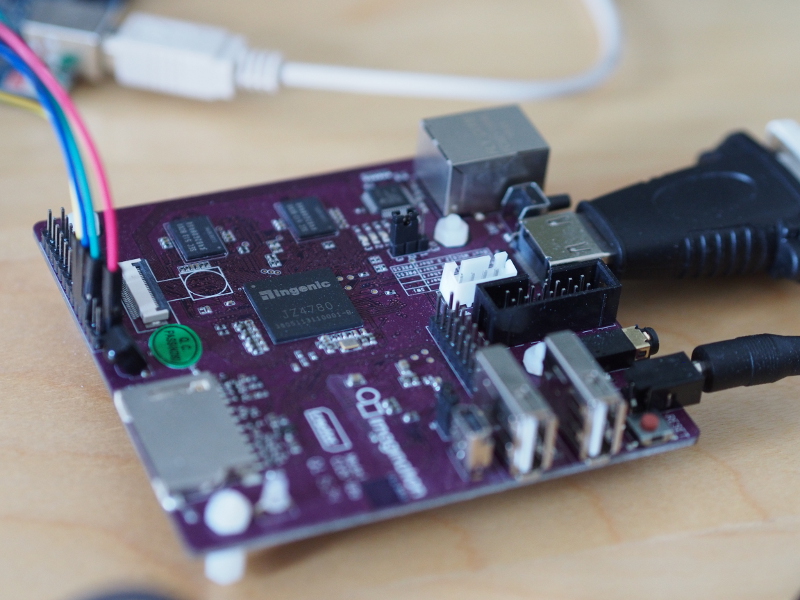

Indeed, the vendor, Ingenic, subsequently licensed the MIPS architecture and produced some SoCs that are officially MIPS-labelled, culminating in the production of the MIPS Creator CI20 product: a development board commissioned by the then-owners of the MIPS portfolio, Imagination Technologies, utilising the Ingenic JZ4780 SoC, presumably to showcase the suitability of the MIPS architecture for various applications. It was apparently for this product that an effort was made to port Fiasco.OC to MIPS, and it was this effort that managed to attract my attention.

It was just as well others had done this hard work. Although I have been gradually immersing myself in the details of how MIPS-based CPUs function, having written some code that can boot the Ben, run a few things concurrently, map memory for different processes, read the keyboard and show things on the screen, I doubt that my knowledge is anywhere near comprehensive enough to tackle porting an existing operating system kernel. But knowing that not only had others done this work, but they had also targeted a rather similar system, gave me some confidence that I might be able to perform the relatively minor porting exercise to target the Ben.

But first I felt that I had to gain experience with Fiasco.OC on MIPS in a more convenient fashion. Although I had muddled through the development of code on the Ben, reusing existing framebuffer driver code and hacking away until I managed to get some output on the display, I felt that if I were to continue my experiments, a more efficient way of debugging my code would be required. With this in mind, I purchased a MIPS Creator CI20 and, after doing things with the pre-installed Debian image plus installing a newer version of Debian, I set out to try Fiasco.OC on the hardware.

The Missing Pieces

According to the Fiasco.OC features page, the “Ci20” is supported. Unfortunately, this assertion of support is not entirely true, as we will come to see. Previously, I mentioned that the JZ4720 in the Ben NanoNote largely implements the instructions of a certain version of the MIPS architecture. Although the JZ4780 in the CI20 introduces some new features over the JZ4720, such as a floating point arithmetic unit, it still lacks various instructions that are present in commonly-used MIPS versions that might be taken as the “baseline” for software support: MIPS32 Release 2 (MIPS32r2), for instance.

Upon trying to get Fiasco.OC to start up, I soon encountered one of these instructions, or at least a particular variant of it: rdhwr (read hardware register) accessing SYNCI_Step (the instruction cache line size). This sounds quite fearsome, but I had been somewhat exposed to cache management operations when conjuring up my own code to run on the Ben. In fact, all this instruction variant does is to ask how big the step size has to be in a loop that invalidates the instruction cache, instead of stuffing such a value into the program when compiling it and thus making an executable that will then be specific to a particular processor.
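A loop of that kind might look roughly like the following C++ sketch, which only computes the addresses such a loop would visit. The sync_addresses function is a hypothetical illustration, not code from Fiasco.OC: on real hardware, the step would be obtained using rdhwr and each address would be passed to synci (or the equivalent cache instructions) instead of being collected in a vector.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: the addresses a cache synchronisation loop would visit
// for the byte range [start, end), given a step equal to the cache line size.
std::vector<std::uint32_t> sync_addresses(std::uint32_t start, std::uint32_t end,
                                          std::uint32_t step)
{
    assert(step != 0 && (step & (step - 1)) == 0);   // step must be a power of two

    std::vector<std::uint32_t> lines;
    if (start >= end)
        return lines;

    std::uint32_t addr = start & ~(step - 1);        // align down to a line boundary
    std::uint32_t last = (end - 1) & ~(step - 1);    // line holding the final byte

    for (;; addr += step)
    {
        lines.push_back(addr);
        if (addr == last)                            // stop before stepping past the end
            break;
    }
    return lines;
}
```

Because the step is a parameter, the same compiled loop works for any cache line size, which is the point of asking the processor for the value at run time.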

Fortunately, those hardworking people who had already ported the code to MIPS had previously encountered another rdhwr variant and had written code to “trap” it in the “reserved instruction” handler. That provided some essential familiarisation with the kernel code, saving me the effort of having to identify the right place to modify, as well as providing a template for how such handlers should operate. I feel fairly competent writing MIPS assembly language, although I would manage to make an easy mistake in this code that would impede progress much later on.

There were one or two other things that also needed fixing up, mentioned briefly in my review of the year article, generally involving position-independent code that was not called correctly and may have been related to me using a generic version of GCC instead of some vendor-modified version. But as I described in that article, I finally managed to boot Fiasco.OC and run a program on top of it, writing the output via the serial connection to my personal computer.

The End of the Very Beginning

I realised that compiling such code for the Ben would either require the complete avoidance of floating point instructions, due to the lack of that floating point unit in the JZ4720, or that I would need to provide implementations of those instructions in software. Fortunately, GCC provides a mode to compile “soft-float” versions of C and C++ programs, and so this looked like the next step. And so, apart from polishing support for features of the Ben like the framebuffer, input/output pins, the clock circuitry, it didn’t really seem that there would be so much to do.

As it so often turns out with technology, optimism can lead to unrealistic estimates of how much time and effort remains in a project. I now know that a description of all this effort would be just too much for a single article. So, I will wrap this article up with a promise that the next one will descend into the details of compilers, assembly language, the SoC, and before too long, we will get to see the inconvenience of debugging low-level software with nothing more than a framebuffer.

The Noble Volunteer (Again)

March 11th, 2018

I saw that the usual refrain of “we’re all volunteers here” had another outing on a recent LWN article about the Python 2 to 3 transition, specifically referring to who it is that supposedly does all the core development work on CPython (as well as constantly changing what the Python language is meant to be). There are a few different observations to be made here, so let me establish three main topics:

- The funding of Python implementation development.

- The hiring of various Python core development contributors.

- Python and Free Software as a hobby or spare time effort.

I have written about how the Python Software Foundation raises and spends money before. For the most part, nothing has changed since then: the PSF appears to raise and then spend hundreds of thousands of dollars every year (apparently down from over $300000 in 2016 to under $250000 in 2017, though), directing this money mostly towards events and promotion. In fact, the largest contribution to core-related Python software development in 2017 was actually from the Mozilla Open Source Support programme, with a $170000 grant to fix up the Python Package Index infrastructure. So the PSF is clearly comfortable leaving it to others to fund the P in PSF.

Lots of people depend on the Python Package Index, but like with Free Software in general, the people making good money while leaning on these common, volunteer-run resources never seem to pitch in significantly themselves. It is true that the maintainer of this resource was allowed to work on it as his day job, but then got “downsized”, and now works in a role where he can work on it again but only as part of his day job. But I imagine that the people at Mozilla, some of whom have connections to the world of Python packaging, quite possibly relying on the package infrastructure to get their own stuff done, were getting fed up with “volunteers” as being the usual excuse for nothing getting done.

Now there certainly are Python core developers who are employed in work that influences CPython development or that has some connection to Python, perhaps related to other implementations of Python. Notably, Pyston and Pyjion were both developed by core developers working at Dropbox and Microsoft respectively. Famously, Guido van Rossum, Python’s originator, was hired by Google and then Dropbox, seemingly being able to dedicate some of his time to Python topics as part of his day job at both places. After all, it was during Van Rossum’s time at Google, accompanied by other Google-employed Python core contributors, that Python 3 started to take shape.

So it seems that some very large companies recognise the value that Python brings, they even hire influential people in the Python core development community, but maybe this does not translate to proper corporate support for Python core development. It could very well be the case that most of these people really do have to write Python code in their day jobs but cannot direct much or any time towards developing Python – the implementations or the language – in their working hours. They would be volunteers in their own time, albeit volunteers facilitated by their employment, having the stability of a relatively well-paid job and the good fortune of having Python core development as a productive and hopefully rewarding hobby.

Maybe it suits everyone being paid as a result of their reputation in the Python community to indulge in core development as a hobby. But what about everyone else? All those other volunteers who are doing the donkey work of testing and fixing the code when it stops working for them, implementing things that others have deemed a good idea, making Python 3 a reality, or whatever? Well, I suppose they get “pizza and beer soda” paid for by the PSF at their sprints.

In certain circles, it seems that a lot of effort is spent promoting a lifestyle that involves feel-good “volunteerism” and getting your name known through selfless volunteering. If you are one of those “other” volunteers, maybe the ultimate goal is to have the senior hobbyists in the community recommending you to their employers, which would explain how Python core developers seem to cluster in various companies. Maybe this is the new “open source” dream: not actually being paid to work on Free Software but merely pursuing it as a hobby, dependent on an employer for the lifestyle but not influenced by them, at least not conspicuously, retaining the ability to play the volunteer card.

And this leads me to a more general observation that came to mind when reading a remark by someone trying to establish a viable enterprise, all for the benefit of Free Software and open hardware. It was about how he was on the ground, doing all the legwork, opening up new opportunities the hard way while people in their comfortable jobs let him get on with it, throwing pennies his way and waiting for their substantial but cheaply-acquired rewards. Now, in that particular instance my sympathy is muted, for various reasons that hopefully do not need a public airing, but I see the point being made and, once you are aware of it, it is an annoyingly familiar one.

You will often see people inviting others to contribute to their projects, writing things like “how about someone fix this, make this better, implement this, do this?” It sounds so constructive, so worthy, like you can make a difference. In Norwegian, there’s even a word for the spirit of this kind of thing – “dugnad” – which is awkward to translate to English, but it effectively denotes an event or general activity where everyone pitches in collectively to get something done in a way that is relatively painless for each participant. Being a cynic, I would often translate “dugnad” as “being too cheap to pay to get something done properly”.

What can be even more galling is that people “howabouting” potential contributors are not only comfortable hobbyists, but some of them also solicit donations for their hobby, not because they need the money but because it might cover a few beers or pizzas, some entertainment, or whatever. And so, a notion is cultivated that everything can be done by voluntary effort, that the value of such work is effectively “beer money”, and with the likes of the PSF not willing to put its own money the way of its own technology, people start to think that if “pizza and beer soda” is enough to improve a Free Software product, why would anyone want to pay people real money to improve it?

And so the notion of the volunteer, so noble and selfless, actually cheapens the value of the work that has to be done. Why bother paying for Free Software or for anyone to work on it when the noble volunteers will get it done? The answer, of course, is that people typically don’t and so the important things typically don’t get done, either. Still, at least the hobbyists get to have some fun.

A Timely Example

In another comment on the referenced article, discussing the general Python 3 strategy and whether anyone who had criticised it might have been worth listening to, it was noted that such critics might be like a “broken clock”: wrong most of the time but coincidentally right on certain occasions. I guess that for those who don’t like to hear criticism of the Python 3 masterplan, I could be one of those broken clocks, having criticised the introduction of Python 3. But if as the saying goes “a broken clock is right twice a day”, maybe some of my other criticisms are also worth taking a look at: one of them is probably good.

Of course, it hardly requires special predictive powers to note that people with large investments in existing code might not like being told that it is “good for them” to have to rewrite it all. And it is hardly a surprise that people have been motivated to look at other languages partly as a consequence of that, partly because of Python’s lack of direction or progress on other fronts, as language evolution dominates over all other concerns.

Spare a thought for Guido van Rossum whose colleagues, no matter where he works, always seem to end up writing software in Go instead of in the language that presumably got him through the door. Perhaps things wouldn’t have played out that way if those benefiting from Python had also properly invested in it, instead of leaving it for the hobbyists or using “we’re all volunteers” as an excuse for not keeping Python competitive with other emerging languages and technologies.

Some Updates

I was recently contacted by Sumana Harihareswara who asked me to clarify that the proposal for improving the Python packaging infrastructure was initiated within the PSF’s Packaging Working Group, not by Mozilla, at least as far as available information would suggest. As someone involved with this working group, Sumana appears to be in a position to make claims about this more authoritatively than I can.

Meanwhile, an invitation to a PSF-related sprint that I happened to see today advertises “an amazing evening of coding, pizza and beer”. Having read a gushing endorsement of “dugnad” culture only recently – a classic promotional piece for readers outside Norway – I cannot help but observe that putting the burden for things onto the voluntary sector, so that the state can save money (to give as tax cuts to the wealthy) and so that the private sector can get something for nothing (to maximise shareholder returns), is rather a pervasive and not-so-noble phenomenon that will readily document itself to anyone paying enough attention.

Concise Attribute Initialisation in Lichen… and Python?

January 22nd, 2018

In my review of 2017, I mentioned a project of mine to make a Python-like language called Lichen that is more amenable to compile-time analysis than Python is, while still having a feature set I might actually be able to use in “real” programs one day. There are a lot of different “moving parts” in the Lichen toolchain, and being preoccupied with various other projects and activities, I haven’t been able to get back into working on it properly in the last few months.

Recently, as I found myself writing Python code for another of my projects, I got to wondering about something in Python that can occur a lot: the initialisation of instance attributes. Here is a classic example:

    class Point:
        def __init__(self, x, y):
            self.x = x
            self.y = y

    # For illustration, here is how the class is used...

    p = Point(640, 512)
    print p.x, p.y    # 640 512

In this example, having to assign the parameter values to the instance attributes is not much of a hardship. But with more verbose initialisation methods with more parameters and more attributes involved, writing everything out can be tiresome. Moreover, mistakes can be made, particularly if the interfaces and structures are evolving. Naturally, there is a range of improvements and measures that attempt to alleviate the problem. Here is the most obvious:

    class Point:
        def __init__(self, x, y):
            self.x = x; self.y = y

This just puts the same statements on one line, so let us move beyond it to the next attempt:

    class Point:
        def __init__(self, x, y):
            self.x, self.y = x, y

Here, we are actually performing “tuple assignment”, with the parameter values being placed in a tuple whose elements are then assigned to the names in the corresponding positions on the left-hand side of the assignment.
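To make the order of events in tuple assignment a little more concrete, here is a small sketch. The names values, a and b are only there for illustration and do not appear elsewhere in this article.

```python
x, y = 640, 512

# The right-hand side is evaluated into a tuple first...
values = (x, y)

# ...and its elements are then unpacked into the targets, left to right.
a, b = values

# Because the tuple is built before any assignment happens, a swap needs
# no temporary variable.
a, b = b, a
```

After the swap, a holds the original y and b holds the original x, which is the same mechanism that lets self.x and self.y be assigned in one statement.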

Now, without any Python “magic”, this is probably as far as you can get. The “magic” involves introspection and a feature known as “decorators” (which Lichen doesn’t support) to let us use something like this:

    class Point:
        @initialising("x", "y")
        def __init__(self, x, y):
            pass

Here, I am taking inspiration from a collection of actual suggestions and solutions, but none of them look like the above. Indeed, many of them take the approach of initialising attributes using every parameter in the method signature which isn’t always what you want, although it does seem to be requested every now and again.

Although the above example looks quite nice, the mechanism responsible for performing the attribute assignments will not look as nice, and so I won’t show it here. And unless a mode is supported where the names can be omitted, thus initialising attributes using all parameters (except self) when you do want to, it is perhaps tiresome to have to write the names out again somewhere else, even more so as strings.
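For the curious, one way such a mechanism might be written is sketched below. This is only an illustration and not any of the suggested solutions referred to above: the initialising decorator is a hypothetical name, and the sketch assumes Python 3, where inspect.signature is available to match argument values to parameter names.

```python
import functools
import inspect

def initialising(*names):
    """Hypothetical decorator: copy the named parameters onto the instance."""
    def decorate(method):
        signature = inspect.signature(method)

        @functools.wraps(method)
        def wrapper(self, *args, **kw):
            # Work out which value ends up bound to which parameter name,
            # including any defaults that were not given explicitly.
            bound = signature.bind(self, *args, **kw)
            bound.apply_defaults()
            for name in names:
                setattr(self, name, bound.arguments[name])
            # Then run the original method body as usual.
            return method(self, *args, **kw)
        return wrapper
    return decorate

class Point:
    @initialising("x", "y")
    def __init__(self, x, y):
        pass

p = Point(640, 512)
q = Point(y=2, x=1)
```

Even in this reduced form, the introspection needed just to pair values with names is considerably more involved than the two assignments it replaces.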

You will also find people advocating more transparent use of the ** catch-all parameter (also not supported by Lichen), sometimes in response to people worried that writing out lots of assignments is a sign of bad code. This yields solutions like this one:

    class Point:
        def __init__(self, **kw):
            for name in ("x", "y"):
                setattr(self, name, kw.get(name))

But keeping named parameters in the signature helps to prevent certain kinds of errors, which is one reason why I don’t intend to support catch-all parameters in Lichen.
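As a rough illustration of the kind of error I have in mind, consider a caller who mistypes a keyword. The class names LoosePoint and StrictPoint are made up for this sketch.

```python
class LoosePoint:
    def __init__(self, **kw):
        # Unknown keywords are never looked at, and missing ones
        # quietly become None.
        for name in ("x", "y"):
            setattr(self, name, kw.get(name))

class StrictPoint:
    def __init__(self, x, y):
        self.x, self.y = x, y

# The mistyped keyword "z" is accepted without complaint.
loose = LoosePoint(x=640, z=512)

# With named parameters, the same mistake is reported immediately.
try:
    StrictPoint(x=640, z=512)
    error = None
except TypeError as exc:
    error = exc
```

Here, loose.y ends up as None and the mistake surfaces only later, if at all, whereas the named signature raises a TypeError at the point of the call.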

But what I wondered is why Python never supported something closer to C++’s initialisation lists. In C++, we might write the code somewhat as follows:

    class Point
    {
        Number x, y;

    public:
        Point(Number x, Number y) : x(x), y(y) {}
    };

Here, it is evident that repetition occurs just as in the “magic” Python example, which is something I might want to eliminate. Maybe we would want to have a shorthand for attribute initialisation within the parameter list itself. And then I thought of a possible syntax:

    class Point:
        def __init__(self, .x, .y):
            pass

So, any parameter employing a dot before its name would result in the assignment of its value to the instance attribute having the same name. Of course, this wouldn’t support a parameter with one name having its value assigned to an attribute with another name, but I thought it best to stick to the simple cases. “Why not add this to Lichen?” I thought.

And in line with not getting too immersed in the toolchain straight away after such a long break, I decided on some rather simple semantics for this feature: dot-prefixed names would still exist as local names; dot-prefixing would just be a form of shorthand meaning that an assignment would be generated at the very start of the function body. So, the above would really translate to the very first example given at the start of this article or, indeed, the second one which is equivalent and is reproduced below:

    # Lichen-only...                    # Python and Lichen...

    class Point:                        class Point:
        def __init__(self, .x, .y):         def __init__(self, x, y):
            pass                                self.x = x; self.y = y
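The translation can be imitated in plain Python with a toy source rewriter. To be clear, this is not how the Lichen toolchain does it (there, the grammar itself is changed); it is merely a sketch of the semantics. It assumes single-line def headers with the body on following lines, an explicit self, no default values and four-space indentation, and expand_dot_parameters is a name invented for the purpose.

```python
import re

# Matches a single-line "def name(params):" header; multi-line signatures
# and default values are deliberately not handled in this toy.
DEF_LINE = re.compile(r"^(\s*)def\s+(\w+)\((.*)\):\s*$")

def expand_dot_parameters(source):
    """Rewrite '.name' parameters as plain parameters plus assignments."""
    output = []
    for line in source.splitlines():
        match = DEF_LINE.match(line)
        if match is None:
            output.append(line)
            continue
        indent, function, params = match.groups()
        names = [p.strip() for p in params.split(",") if p.strip()]
        # The dot-prefixed names remain ordinary local names...
        plain = [n.lstrip(".") for n in names]
        output.append("%sdef %s(%s):" % (indent, function, ", ".join(plain)))
        # ...and each one gains an assignment at the start of the body.
        for n in names:
            if n.startswith("."):
                output.append("%s    self.%s = %s" % (indent, n[1:], n[1:]))
    return "\n".join(output)

lichen_like = (
    "class Point:\n"
    "    def __init__(self, .x, .y):\n"
    "        pass\n"
)

expanded = expand_dot_parameters(lichen_like)

# The rewritten text is ordinary Python, so it can be executed directly.
namespace = {}
exec(expanded, namespace)
p = namespace["Point"](640, 512)
```

The rewritten source contains a conventional signature followed by the generated assignments, so the resulting instance has its x and y attributes set without the body having mentioned them.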

Keeping the sophistication of the feature at an unambitious level, besides letting me slowly familiarise myself again with the code, also helps to deal with potential conflicts with other mechanisms. For example, what if someone wanted to employ a name twice – once dot-prefixed, once unprefixed – like this…?

    class Point:
        def __init__(self, .x, .y, x):
            self.intensity = x ** 2

By asserting that the dot-prefixed x is really just x that also initialises the attribute of the same name, we can fall back on the normal rules around parameters and forbid such duplicate names without having to think very hard about temporary names or more exotic mechanisms that might be used to initialise attributes directly. One other thing worth mentioning is that I don’t reserve the use of such parameters for the exclusive use of initialiser methods, so other applications are possible. For example:

    class Point:
        def __init__(.x, .y): pass
        def update(.x, .y): pass

Here, I also omit self because Lichen defines it as always being present in methods, anyway. And we could actually make the update method an alias of the initialiser method, too, but let us not get too carried away!
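As for the duplicate name case discussed a little earlier, the “normal rules around parameters” can be seen at work in Python itself, which refuses to compile a signature that uses the same name twice. Once the dot prefix is treated as mere shorthand, the prefixed and unprefixed x fall under exactly this rule. The helper is_accepted below is a made-up name for this sketch.

```python
def is_accepted(source):
    """Report whether Python's compiler accepts the given source text."""
    try:
        compile(source, "<example>", "exec")
        return True
    except SyntaxError:
        return False

unique_names = "def __init__(self, x, y):\n    pass\n"

# This is what the problematic signature amounts to once the dots
# are understood as shorthand: x appears twice.
duplicate_names = "def __init__(self, x, y, x):\n    pass\n"

unique_ok = is_accepted(unique_names)
duplicate_ok = is_accepted(duplicate_names)
```

The first definition compiles and the second is rejected as a syntax error, with no special handling needed for the shorthand.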

Fortunately, I adopted a parser framework in Lichen that was originally written for PyPy that allows relatively straightforward modification of the language grammar. Conveniently, the grammar changes required for this feature are minimal and I don’t even have to add any extra tokens. That made me wonder whether such a syntax had been suggested for Python at some point or other. Some quick searches haven’t yielded any results, and I can’t be bothered to trawl the different mailing list archives to find mentions of such features. I can easily imagine that such a feature might have been discussed rather early in Python’s lifetime, possibly in the mid-1990s.

Arguments for new syntax in Python are often met with arguments against “syntactic sugar”, with such “sugar” introducing more convenient notation or a form of shorthand for particular operations. Over the years, people have argued for more concise ways of referencing instance attributes and class attributes instead of using the almost-special self name (that is rather more special in Lichen). Compound assignments to instance attributes have probably been discussed, too, maybe proposing things like this:

    # Compound assignment idea...       # Equivalent assignment...

    self.(x, y) = x, y                  self.x, self.y = x, y

In response to such suggestions, people seem to be asked how often they need to write such things, whether it is really such a burden to do so, and whether their programming tools cannot help them write out the conventional assignments semi-automatically instead. Proposed general language constructs may well risk introducing conflicts with other language features in unanticipated ways, and if such constructs only ever get used in certain, rather limited, circumstances then one can justifiably ask whether it is really worth the effort to support them. They will, after all, need people to implement them, test them, maintain them, and keep fixing them long into the future.

As is evident from the discussion of the problem of concise initialisation, Python’s community has grown accustomed to solving simple problems in fairly complicated ways using general mechanisms introduced to support broad classes of functionality. Decorators were introduced into Python as a way of inserting extra code around methods and functions to modify or extend their behaviour, allowing people to tackle such problems by getting that extra code to initialise attributes or to do many other weird, wild and wonderful things. Providing such mechanisms lets the language designers send people elsewhere when those people descend on the designers demanding a quick syntactic fix for a specific problem they might be having.