An Absence of Strategy?

Wednesday, January 16th, 2019

I keep starting articles but not finishing them. However, after responding to some correspondence recently, where I got into a minor rant about a particular topic, I thought about starting this article and more or less airing the rant for a wider audience. I don’t intend to be negative here, so even if this sounds like me having a moan about how things are, I really do want to see positive and constructive things happen to remedy what I see as deficiencies in the way people go about promoting and supporting Free Software.

The original topic of the correspondence was my brother’s article about submitting “apps” to F-Droid, the Free Software application repository for Android, which somehow got misattributed to me in the FSFE newsletter. As anyone who knows both of us can imagine, it is not particularly unusual that people mix us up, but it does still surprise me how people can be fluid about other people’s names and assume that two people with the same family name are the same person.

Eventually, the correction was made, for which I am grateful, and it must be said that I do also appreciate the effort that goes into writing the newsletter. Having previously had the task of doing some of the Fellowship interviews, I know that such things require more work than people might think, largely go either unnoticed or unremarked, and as a participant in the process it can be easy to wonder afterwards if it was worth the bother. I do actually follow the FSFE Planet and the discussion mailing list, so I’d like to think that I keep up with what other people do, but the newsletter must have some value to those who don’t want to follow a range of channels.

A Rant about Free Software on Mobile

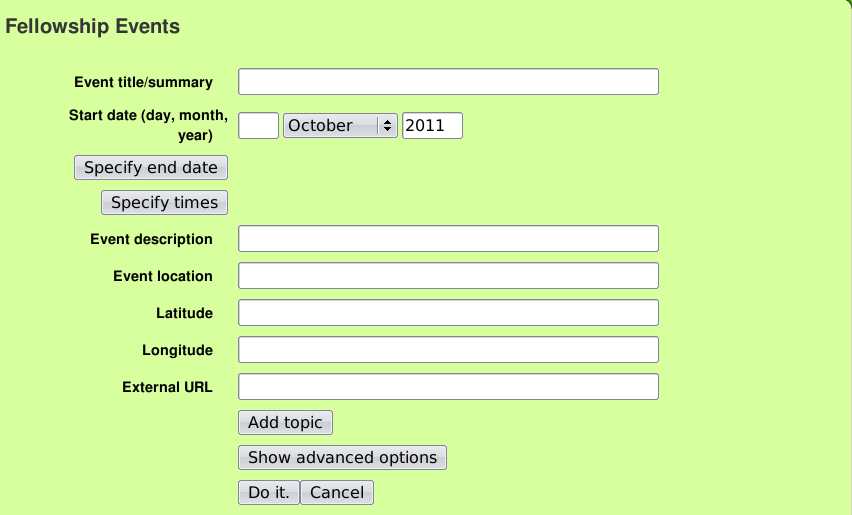

Well, it wasn’t as much of a rant as it was a moan about how there doesn’t seem to be a coherent strategy about Free Software on mobile devices. The FSFE has had some kind of campaign about Android for quite some time. What it amounts to is promoting Free Software applications and Free Software distributions on phones.

This probably isn’t significantly different from the activities promoted by the FSF, whose Defective By Design campaign features a gift guide promoting phones that run Free Software. The FSF also promotes and funds the Replicant project, more of which below.

For all I know, the situation about getting Free Software applications onto a phone probably isn’t all that dire, assuming that Google and phone vendors don’t try and prevent users from installing software that isn’t delivered via Google Play or other officially-sanctioned channels. Or as the Android documentation puts it:

Of course, this is rather reminiscent of the “bad old days” where some people could copy things on and off their phone using Bluetooth (or for those with particularly long memories, infrared communication) whereas others had those features disabled by their provider. So, while some people get to enjoy the benefits of Free Software, others are denied them: another case of divide-and-rule in action, I suppose.

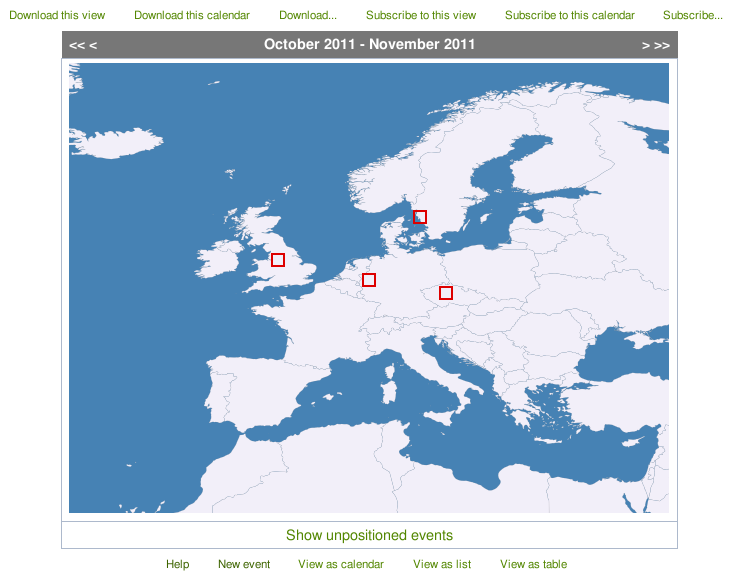

But it is the situation about Free Software distributions, more specifically having a Free Software operating system with Free Software device drivers and Free Software firmware, that is most worrying. The FSFE campaign points people to the two enduring initiatives for putting a different kind of Android distribution on phones: Replicant and LineageOS (previously CyanogenMod).

While LineageOS seems to try and support as many devices as possible, it relies on non-free software to support device hardware. Meanwhile, Replicant employs only Free Software and is therefore limited in which devices it may support and to what extent those devices’ features will function.

Although I can’t really muster much enthusiasm for Android and its derivatives, I don’t think there is anything wrong with trying to provide a completely Free Software distribution of that software. Certainly, there will always be challenges with the evolution of the upstream code, being steered this way and that by its corporate parent for maximum corporate benefit, but this isn’t really much different to clinging on to the pace of change (and churn) in an openly-developed project like the Linux kernel.

But ultimately, these initiatives will always be reacting to what other people, specifically those working for large companies, have decided to do. It will always be about chasing the latest release of the upstream software and making it acceptable for a Free Software audience. And it will always be about seeing whether the latest devices can be supported or not and then trying to figure out how. And this is where most people start to wonder why things always have to be like this, spurring my rant.

For the Long Term

To be fair to the FSFE’s Android-related campaign, the advice given does give people some concrete activities to consider: it isn’t simply the “go out and write code” battle cry that sometimes drifts through the air after an acrimonious episode where nobody can agree with each other. Helping F-Droid get more applications published, writing more Free Software applications, helping the operating system projects with their efforts: these are all worthwhile things for people to do.

But we only need look at the FSF’s ethical gift guide to see the severe challenges being faced over the longer term. For yet another year, the only offerings are older, refurbished Samsung smartphones, the most recent being the Galaxy S3 and Galaxy Note 2 from 2012. Now, there is nothing wrong with older hardware or refurbished devices. After all, I have written about older and much less powerful hardware than that which I believe still should have a place in modern life.

Nor should people regard the price of such refurbished units as particularly expensive. Of course you can buy a new phone with better specifications for the same or even less, but that new phone won’t be running only Free Software. Yes, there is always the Fairphone whose creators’ grip on Free Software, software longevity and other matters that weren’t confronted in the beginning is supposedly now rather better, although the hardware drivers remain non-free, so it isn’t really comparable, either.

Here, it is illustrative to consider community-originating efforts to develop smartphones, particularly since there is a perception that such efforts eventually end up pitching “expensive” and “underpowered” devices that “aren’t competitive”. There is obviously a collection of such initiatives ongoing at any given point, but ignoring random crowdfunding campaigns and corporate publicity stunts, we might usefully focus on some more familiar projects: the GTA04 successor to the Openmoko FreeRunner and the Neo900 successor to the Nokia N900.

Both of these projects are in a not-easily-explained state. The GTA04 device was made in a number of incrementally refined versions and sold primarily to people who already had a FreeRunner into which they could install the new hardware. However, difficulties with the last hardware revision meant that it was no longer viable as a product, with the cost of overcoming production problems being rather likely to exceed any money otherwise made on the units.

Meanwhile, the Neo900 project is effectively stalled having experienced several setbacks, notably the freezing of funds by PayPal for no really good reason, and difficulties in finding and retaining qualified people to do things like board layout. Although there are aspirations to get to completion, perhaps with some modification of the original goals, the path to completion remains obscure and uncertain. It is certainly hard to sustain my initial enthusiasm for the project, even if I do sympathise deeply with the struggles and difficulties of those trying to deliver something that they want to see succeed perhaps more than anyone else.

The future is not necessarily entirely bleak for these projects, though. Experience from the GTA04 effort may have been beneficial to the development of the Pyra handheld computer, whose own genesis has been troubled at times and yet forthrightly communicated in an honourably transparent fashion by its initiator, and the CPU board for that device may end up as the basis for a new product known as the GTA15. Given the common architectural heritage of the GTA04, N900 and Neo900, it would not be completely inconceivable that if some kind of way forward could be found for the Neo900, GTA15 might be some kind of contributing element to that.

What these projects should illustrate, however, is that the foundations of a Free Software mobile device are difficult to prepare, subject to sudden and potentially catastrophic setbacks or outright failure, and they require persistence and plenty of resources, not least of the financial kind but also the dedication of people with the right competence and experience. Sadly, these projects never get the attention, the recognition, or the generosity they deserve.

Seeing the Bigger Picture

If we care about being able to support all the different elements of our phones with Free Software, instead of crossing out items in the specification list, sacrificing functionality because nobody knows how it works without signing a non-disclosure agreement and then only being allowed to release a binary blob for loading into specific Linux kernel versions, then we need to be there at the start, when the phone gets designed, and play a role in making sure that everything can be supported by Free Software. Spending time and effort on figuring out someone else’s hardware is not time and effort well spent.

Indeed, from the moment a proprietary product gets into the hands of developers, the clock is ticking. Already, given the pace of product development and shipment, the product is heading for obsolescence and its manufacturer will be tooling up to make its successor. Even if downstream developers work quickly and get as much of the hardware supported as they can, there will only be a certain period before the product becomes difficult to obtain. And then the process of catch-up starts all over again with the next product.

Of course, product variations always used to happen with desktop and laptop computers. One day you’d get a laptop with one chipset and the next day the “same” laptop would contain something else. The only thing that eased the pain involved was broad hardware support for these kinds of devices, and even then there would be chipsets notorious for their lack of support in things like the Linux kernel.

Such pitfalls cultivated demands for products that could run Free Software and be supplied with such software instead of the usual proprietary products bundled as a consequence of Microsoft’s anticompetitive and coercive business practices. It was no longer enough to accept that we might buy a computer with bundled software and “install over it”, that this might emphasise our Free Software credentials. Credible advocates of Free Software have sought to identify vendors offering systems that are either already supplied with Free Software or that come without any preinstalled software at all, in both cases being fully capable of supporting a Free Software distribution.

But we now find ourselves in the absurd situation with mobile devices where remedial measures comparable to “install over it” are almost the only things people can suggest, that there really aren’t any mobile device vendors who can offer a bare, supportable device or one that is, say, already running Replicant and offering access to all of the device’s features. And although refurbished devices are sold that may run Replicant well enough, we lack another essential guarantee that may not have been so important in the past, one that community-originating hardware projects might be able to help with.

In being involved with the design of these devices, we can seek to dictate how long they remain viable. Instead of having a large corporation decide that now is the time for you to buy their next device and that the product you bought and liked is now deliberately unavailable, we can seek to keep making devices as long as they have a role and have people wanting to keep using them.

If something runs a Free Software distribution well enough, and if that device can still be made, it becomes a safe choice and something we can recommend to others. At last, we get some kind of certainty in a world whose stream of continual change is often fad-driven, exploitative and needless.

The Strategic Vacuum

So it seems obvious to me that if people want Free Software on phones, they need to cultivate the efforts to make that Free Software viable, which means cultivating sustainable hardware design and actually promoting and supporting the projects pursuing it. Otherwise, it is like trying to plant an orchard without paying attention to the soil, cursing that the trees will not grow whilst being oblivious to the fact that the ground is concrete.

And this goes beyond this particular domain. Free Software advocacy is all well and good, but there needs to be practical action that goes beyond pitching in and nudging things towards success. It is wonderful that collective effort and collaboration can take small endeavours and grow them into solutions that are useful for many, but it can be too much to expect everything to just coalesce as if by magic, that people and projects will just discover each other, work together, strengthen each other and multiply the benefits.

There needs to be a strategy, for people to identify real-world problems that are not being addressed by Free Software, to identify the projects that might be applied to those problems, and to propose actual solutions. In the absence of Free Software, proprietary and exploitative solutions are able to stake out their territory, to entrench themselves and to thrive.

Here, I struggle to see which Free Software organisations have both the breadth of scope and the depth of focus to make a difference. Developer-driven organisations like Debian and KDE have a lot going on, and they deliver non-trivial software systems, and yet sustaining something like a viable mobile platform (encompassing hardware and software) is seemingly beyond their reach. Neither has a coherent answer to other significant challenges of our time, such as the dominance of proprietary (and destructive) communications platforms.

Meanwhile, advocacy-driven organisations like the FSFE merely give advice to people about how they might help Free Software. The FSF arguably combines this with actual development through the GNU project, and there are those lists of urgent activities, but one cannot help but have the impression that the urgency is reactive and not the product of strategic vision (beyond the goal of the proliferation of Free Software, of course). I would like to think that the Software Freedom Conservancy might combine things more effectively, but it perhaps remains more of an umbrella organisation with a similar emphasis on broad Free Software adoption.

I would like to think that the step of getting involved in Free Software is but the first step towards fairer, kinder, more transparent, more productive and more sustainable societies. Traces of such visions can be seen in the communications of various organisations and yet they largely hold back from suggesting what the next steps might be. And yet, I think, we will in future really need to take those steps in order to protect ourselves, our societies, and the things we care about.

In general, we notice the absence of strategy; in specific cases, we notice it keenly. Which organisations are willing and able to fill this strategic void coherently and decisively, to offer concrete solutions as opposed to suggestions, to have something definite to work towards, and to direct effort and resources into actually realising such goals? Surely, in this age of hoping for the best, those organisations will be the ones that deserve our support the most.