As noted previously, two of my interests in recent times have been computing history and microkernel-based operating systems. Having perused academic and commercial literature in the computing field a fair amount over the last few years, I experienced some feelings of familiarity when looking at the schedule for FOSDEM, which took place earlier in the year, brought about when encountering a talk in the “microkernel and component-based OS” developer room: “A microkernel-based orchestrator for distributed Internet services?”

In this talk’s abstract, mentions of the complexity of current Linux-based container solutions led me to consider the role of containers and virtual machines. Doing so brought back a recollection of a paper published in 1996, “Microkernels Meet Recursive Virtual Machines”, describing a microkernel-based system architecture called Fluke. When that paper was published, I was just starting out in my career and preoccupied with other things. It was only in pursuing those interests of mine that it came to my attention more recently.

It turned out that there were others at FOSDEM with similar concerns. Liam Proven, who regularly writes about computing history and alternative operating systems, gave a talk, “One way forward: finding a path to what comes after Unix”, that combined observations about the state of the computing industry, the evolution of Unix, and the possibilities of revisiting systems such as Plan 9 to better inform current and future development paths. This talk has since been summarised in four articles, concluding with “A path out of bloat: A Linux built for VMs” that links back to the earlier parts.

Both of these talks noted that in attempting to deploy applications and services, typically for Internet use, practitioners are now having to put down new layers of functionality to mitigate or work around limitations in existing layers. In other words, they start out with an operating system, typically based on Linux, that provides a range of features including support for multiple users and the ability to run software in an environment largely confined to the purview of each user, but end up discarding most of this built-in support as they bundle up their software within such things as containers or virtual machines, where the software can pretend that it has access to a complete environment, often running under the control of one or more specific user identities within that environment.

With all this going on, people should be questioning why they need to put one bundle of software (their applications) inside another substantial bundle of software (an operating system running in a container or virtual machine), only to deploy that inside yet another substantial bundle of software (an operating system running on actual hardware). Computing resources may be the cheapest they have ever been, supply chain fluctuations notwithstanding, but there are plenty of other concerns about building up levels of complexity in systems that should prevent us from using cheap computing as an excuse for business as usual.

A Quick Historical Review

In the early years of electronic computing, each machine would be dedicated to running a single program uninterrupted until completion, producing its results and then being set up for the execution of a new program. In this era, one could presumably regard a computer simply as the means to perform a given computation, hence the name.

However, as technology progressed, it became apparent that dedicating a machine to a single program in this way utilised computing resources inefficiently. When programs needed to access relatively slow peripheral devices, such as when reading data from, or writing data to, storage devices, the instruction processing unit would be left idle for significant amounts of cumulative time. Thus, solutions were developed to allow multiple programs to reside in the machine at the same time. If a running program had paused to allow data to be transferred to or from storage, another program might have been given a chance to run until it also found itself needing to wait for those peripherals.

In such systems, each program can no longer truly consider itself as the sole occupant or user of the machine. However, there is an attraction in allowing programs to be written in such a way that they might be able to ignore or overlook this need to share a computer with other programs. Thus, the notion of a more abstract computing environment begins to take shape: a program may believe that it is accessing a particular device, but the underlying machine operating software might direct the program’s requests to a device of its own choosing, presenting an illusion to the program.

Although these large, expensive computer systems then evolved to provide “multiprogramming” support, multitasking, virtual memory, and virtual machine environments, it is worth recalling the evolution of computers at the other end of the price and size scale, starting with the emergence of microcomputers from the 1970s onwards. Constrained by the availability of affordable semiconductor components, these small systems at first tended to limit themselves to modest computational activities, running one program at a time, perhaps punctuated occasionally by interrupts allowing the machine operating software to update the display or perform other housekeeping tasks.

As microcomputers became more sophisticated, so expectations of the functionality they might deliver also became more sophisticated. Users of many of the earlier microcomputers might have run one application or environment at a time, such as a BASIC interpreter, a game, or a word processor, and what passed for an operating system would often only really permit a single application to be active at once. A notable exception in the early 1980s was Microware’s OS-9, which sought to replicate the Unix environment within the confines of 8-bit microcomputer architecture, later ported to the Motorola 68000 and used in, amongst other things, Philips’ CD-i players.

OS-9 offered the promise of something like Unix on fairly affordable hardware, but users of systems with more pedestrian software also started to see the need for capabilities like multitasking. Even though the dominant model of microcomputing, perpetuated by the likes of MS-DOS, had involved running one application to do something, then exiting that application and running another, it quickly became apparent that users themselves had multitasking impulses and were inconvenienced by having to finish off something, even temporarily, switch to another application offering different facilities, and then switch back again to resume their work.

Thus, the TSR and the desk accessory were born, even finding a place on systems like the Apple Macintosh, whose user interface gave the impression of multitasking functionality and allowed switching between applications, even though only a single application could, in general, run at a time. Later, Apple introduced MultiFinder with the more limited cooperative flavour of multitasking, in contrast to systems already offering preemptive multitasking of applications in their graphical environments. People may feel the compulsion to mention the Commodore Amiga in such contexts, but a slightly more familiar system from a modern perspective would be the Torch Triple X workstation with its OpenTop graphical environment running on top of Unix.

The Language System Phenomenon

And so, the upper and lower ends of the computing market converged on expectations that users might be able to run many programs at a time within their computers. But the character of these expectations might have been coloured differently from the prior experiences of each group. Traditional computer users might well have framed the environment of their programs in terms of earlier machines and environments, regarding multitasking as a convenience but valuing compatibility above all else.

At the lower end of the market, however, users were looking to embrace higher-level languages such as Pascal and Modula-2, these being cumbersome on early microprocessor systems but gradually becoming more accessible with the introduction of later systems with more memory, disk storage and processors more amenable to running such languages. Indeed, the notion of the language environment emerged, such as UCSD Pascal, accompanied by the portable code environment, such as the p-System hosting the UCSD Pascal environment, emphasising portability and defining a machine detached from the underlying hardware implementation.

Although the p-System could host other languages, it became closely associated with Pascal, largely by being the means through which Pascal could be propagated to different computer systems. While 8-bit microcomputers like the BBC Micro struggled with something as sophisticated as the p-System, even when enhanced with a second processor and more memory, more powerful machines could more readily bear the weight of the p-System, even prompting some to suggest at one time that it was “becoming the de facto standard operating system on the 68000”, supplied as standard on 68000-based machines like the Sage II and Sage IV.

Such language environments became prominent for a while, Lisp and Smalltalk being particularly fashionable, and with the emergence of the workstation concept, new and divergent paths were forged for a while. Liam Proven previously presented Wirth’s Oberon system as an example of a concise, efficient, coherent environment that might still inform the technological direction we might wish to take today. Although potentially liberating, such environments were also constraining in that their technological homogeneity – the imposition of a particular language or runtime – tended to exclude applications that users might have wanted to run. And although Pascal, Oberon, Lisp or Smalltalk might have their adherents, they do not all appeal to everyone.

Indeed, during the 1980s and even today, applications sell systems. There are plenty of cases where manufacturers ploughed their own furrow, believing that customers would see the merits in their particular set of technologies and be persuaded into adopting those instead of deploying the products they had in mind, only to see the customers choose platforms that supported the products and technologies that they really wanted. Sometimes, vendors doubled down on customisations to their platforms, touting the benefits of custom microcode to run particular programs or environments, ignoring that customers often wanted more generally useful solutions, not specialised products that would become uncompetitive and obsolete as technology more broadly progressed.

For all their elegance, language-oriented environments risked becoming isolated enclaves appealing only to their existing users: an audience who might forgive and even defend the deficiencies of their chosen systems. For example, image-based persistence, where software could be developed in a live environment and “persisted” or captured in an image or “world” for later use or deployment, remains a tantalising approach to software development that sometimes appeals to outsiders, but one can argue that it also brings risks in terms of reproducibility around software development and deployment.

If this sounds familiar to anyone old enough to remember the end of the 1990s and the early years of this century, probing this familiarity may bring to mind the Java bandwagon that rolled across the industry. This caused companies to revamp their product lines, researchers to shelve their existing projects, developers to encounter hostility towards the dependable technologies they were already using, and users to suffer the mediocre applications and user interfaces that all of this upheaval brought with it.

Interesting research, such as that around Fluke and similar projects, was seemingly deprioritised in favour of efforts that presumably attempted to demonstrate “research relevance” in the face of this emerging, everything-in-Java paradigm with its “religious overtones”. And yet, commercial application of supposedly viable “pure Java” environments struggled in the face of abysmal performance and usability.

The Nature of the Machine

Users do apparently value heterogeneity or diversity in their computing environments, to be able to mix and match their chosen applications, components and technologies. Today’s mass-market computers may have evolved from the microcomputers of earlier times, accumulating workstation, minicomputer and mainframe technologies along the way, and they may have incorporated largely sensible solutions in doing so, but it can still be worthwhile reviewing how high-end systems of earlier times addressed issues of deploying different kinds of functionality safely within the same system.

When “multiprogramming” became an essential part of most system vendors’ portfolios, the notion of a “virtual machine” emerged, this being the vehicle through which a user’s programs could operate or experience the machine while sharing it with other programs. Today, using our minicomputer or Unix-inspired operating systems, we think of a virtual machine as something rather substantial, potentially simulating an entire system with all its peculiarities, but other interpretations of the term were once in common circulation.

In the era when the mainframe reigned supreme, mainframe vendors differed in their definitions of a virtual machine. International Computers Limited (ICL) revamped their product range in the 1970s in an attempt to compete with IBM, introducing their VME or Virtual Machine Environment operating system to run on their 2900 series computers. Perusing the literature related to VME reveals a system that emphasises different concepts to those we might recognise from Unix, even though there are also many similarities that are perhaps obscured by differences in terminology. Where we are able to contrast the different worlds of VME and Unix, however, is in the way that ICL chose to provide a Unix environment for VME.

As the end of the 1980s approached, once dominant suppliers with their closed software and solution ecosystems started to get awkward questions about Unix and “open systems”. The less well-advised, like Norway’s rising star, Norsk Data, refused to seriously engage with such trends, believing their own mythology of technological superiority, until it was too late to convince their customers switching to other platforms that they had suddenly realised that this Unix thing was worthwhile after all. ICL, meanwhile, only tentatively delivered a Unix solution for their top-of-the-line systems.

Six years after ICL’s Series 39 mainframe range was released, and after years of making a prior solution selectively available, ICL’s VME/X product was delivered, offering a hosted Unix environment within VME, broadly comparable with Amdahl’s UTS and IBM’s IX/370. Eventually, VME/X was rolled into OpenVME, acknowledging “open systems” rather like Digital’s OpenVMS, all without actually being open, as one of my fellow students once joked. Nevertheless, VME/X offers an insight into what a virtual machine is in VME and how ICL managed to map Unix concepts into VME.

Reading VME documentation, one gets the impression that, fundamentally, a virtual machine in the VME sense is really about giving an environment to a particular user, as opposed to a particular program. Each environment has its own private memory regions, inaccessible to other virtual machines, along with other regions that may be shared between virtual machines. Within each environment, a number of processes can be present, but unlike Unix processes, these are simply execution contexts or, in Unix and more general terms, threads.

Since the process is the principal abstraction in Unix through which memory is partitioned, it is curious that in VME/X, the choice was made to not map Unix processes to VME virtual machines. Instead, each “terminal user”, each “batch job” (not exactly a Unix concept), as well as “certain daemons” were given their own virtual machines. And when creating a new Unix process, instead of creating a new virtual machine, VME/X would in general create a new VME process, seemingly allowing each user’s processes to reside within the same environment and to potentially access each other’s memory. Only when privilege or user considerations applied would a new process be initiated in a new virtual machine.

Stranger than this, however, is VME’s apparent inability to run multiple processes concurrently within the same virtual machine, even on multiprocessor systems, although processes in different virtual machines could run concurrently. For one process to suspend execution and yield to another in the same virtual machine, a special “process-switching call” instruction was apparently needed, providing a mechanism like that of green threads or fibers in other systems. However, I could imagine that this could have provided a mechanism for concealing each process’s memory regions from others by using this call to initiate a reconfiguration of the memory segments available in the virtual machine.
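To illustrate the general idea of processes that only give way to each other at explicit switching points, here is a minimal sketch in Python using generators. It is not a model of VME itself: the names worker and run_round_robin are invented for this illustration, and the yield statement merely stands in for something like the “process-switching call” described above.

```python
from collections import deque

def worker(name, steps, log):
    """A 'process' that does one unit of work, then explicitly gives up control."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # stands in for an explicit process-switching call (an assumption)

def run_round_robin(processes):
    """Resume each process in turn until it yields; drop it once it has finished."""
    ready = deque(processes)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run until the next explicit switch point
        except StopIteration:
            continue               # the process has finished: do not requeue it
        ready.append(current)      # otherwise, it waits for its next turn

log = []
run_round_robin([worker("a", 2, log), worker("b", 3, log)])
print(log)
```

Only one of these “processes” ever runs at a time, and none of them can be interrupted between switch points, which is the essential property of green threads and fibers.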

I have not studied earlier ICL systems, but it would not surprise me if the limitations of this environment resembled those of earlier generations of products, where programs might have needed to share a physical machine graciously. Thus, the heritage of the system and the expectations of its users from earlier times appear to have survived to influence the capabilities of this particular system. Yet, this Unix implementation was actually certified as compliant with the X/Open Portability Guide specifications, initially XPG3, and was apparently the first system to have XPG4 base compliance.

Partitioning by User

A tour of a system that might seem alien or archaic to some might seem self-indulgent, but it raises a few useful thoughts about how systems may be partitioned and the sophistication of such partitioning. For instance, VME seems to have emphasised partitioning by user, and this approach is a familiar and mature one with Unix systems, too. Traditionally, dedicated user accounts have been set up to run collections of associated programs. Web servers often tend to run in a dedicated account, typically named “apache” or “httpd”. Mail servers and database servers also tend to follow such conventions. Even Android has used distinct user accounts to isolate applications from each other.

Of course, when partitioning functionality by user in Unix systems, one must remember that all of the processes involved are isolated from each other, in that they do not share memory inadvertently, and that the user identity involved is merely associated with these processes: it does not provide a container for them in its own right. Indeed, the user abstraction is simply the way that access by these processes to the rest of the system is controlled, largely mediated by the filesystem. Thus, any such partitioning arrangement brings the permissions and access control mechanisms into consideration.

In the simplest cases, such as a Web server needing to be able to read some files, the necessary adjustments to groups or even the introduction of access control lists can be sufficient to confine the Web server to its own territory while allowing other users and programs to interact with it conveniently. For example, Web pages can be published and updated by adding, removing and changing files in the Web site directories given appropriate permissions. However, it is when considering the combination of servers or services, each traditionally operating under their own account, that administrators start to consider alternatives to such traditional approaches.

Let us consider how we might deploy multiple Web applications in a shared hosting environment. Clearly, it would be desirable to give all of these applications distinct user accounts so that they would not be able to interfere with each other’s files. In a traditional shared hosting environment, the Web application software itself might be provided centrally, with all instances of an application relying on the same particular version of the software. But as soon as the requirements for the different instances start to diverge – requiring newer or older versions of various components – they become unable to rely entirely on the centrally provided software, and alternative mechanisms for deploying divergent components need to be introduced.

To a customer of such a service having divergent requirements, the provider will suggest various recipes for installing new software, often involving language-specific packaging or building from source, with compilers available to help out. The packaging system of the underlying software distribution is then mostly being used by the provider itself to keep the operating system and core facilities updated. This then leads people to conclude that distribution packaging is too inflexible, and this conclusion has led people in numerous directions to try and address the apparently unmet needs of the market, as well as to try and pitch their own particular technology as the industry’s latest silver bullet.

There is arguably nothing to stop anyone deploying applications inside a user’s home directory or a subdirectory of the home directory, with /home/user/etc being the place where common configuration files are stored, /home/user/var being used for some kind of coordination, and so on. Many applications can be configured to work in another location. One problem is that this configuration is sometimes fixed within the software when it is built, meaning that generic packages cannot be produced and deployed in arbitrary locations.
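As a rough sketch of what relocatable software might do instead of fixing its locations when built, the following Python fragment derives its directories from a prefix supplied at run time. The function name locations and the particular set of directories are assumptions made for the sake of illustration, not a convention taken from any particular packaging system.

```python
from pathlib import PurePosixPath

def locations(prefix="/"):
    """Derive the usual directory layout from a prefix chosen at run time."""
    root = PurePosixPath(prefix)
    return {
        "config": root / "etc",    # common configuration files
        "state": root / "var",     # coordination and other variable data
        "programs": root / "usr",  # the installed software itself
    }

system_wide = locations()
per_user = locations("/home/user")
print(per_user["config"])
```

A program written in this way could be packaged once and deployed either centrally or under any user’s home directory, as long as something tells it which prefix applies.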

Another is that many of the administrative mechanisms in Unix-like systems favour the superuser, rely on operating on software configured for specific, centralised locations, and only really work at the whole-machine level with a global process table, a global set of user identities, and so on. Although some tools support user-level activities, like the traditional cron utility, scheduling jobs on behalf of users, as far as I know, traditional Unix-like systems have never really let users define and run their own services along the same lines as is done for the whole system, administered by the superuser.

Partitioning by Container

If one still wants to use nicely distribution-packaged software on a per-user, per-customer or per-application basis, what tends to happen is that an environment is constructed that resembles the full machine environment, with this kind of environment existing in potentially many instances on the same system. In other words, just so that, say, a Debian package can be installed independently of the host system and any of its other users, an environment is constructed that provides directories like /usr, /var, /etc, and so on, allowing the packaging system to do its work and to provide the illusion of a complete, autonomous machine.

Within what might be called the Unix traditions, a few approaches exist to provide this illusion to a greater or lesser degree. The chroot mechanism, for instance, permits the execution of programs that are generally only able to see a section of the complete filesystem on a machine, located at a “changed root” in the full filesystem. By populating this part of the filesystem with files that would normally be found at the top level or root of the normal filesystem, programs invoked via the chroot mechanism are able to reference these files as if they were in their normal places.
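The effect of a changed root on the naming of files can be sketched as a simple path translation. The following Python function is purely a lexical simulation under stated assumptions: a real chroot is enforced by the kernel, the function name resolve_in_changed_root and the /srv/jail location are invented here, and symbolic links are ignored entirely.

```python
import posixpath

def resolve_in_changed_root(new_root, path):
    """Map a path as seen by a confined program onto the full filesystem."""
    # Normalise against "/" first, so that ".." cannot climb above the changed root.
    confined = posixpath.normpath(posixpath.join("/", path))
    # Then graft the confined path onto the chosen part of the full filesystem.
    return posixpath.normpath(posixpath.join(new_root, confined.lstrip("/")))

print(resolve_in_changed_root("/srv/jail", "/etc/passwd"))
print(resolve_in_changed_root("/srv/jail", "/../../etc/passwd"))
```

Both calls refer to the same file beneath the changed root: the confined program asks for /etc/passwd and is given the copy that was placed there for it, not the one belonging to the host system.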

Various limitations in the scope of chroot led to the development of such technologies as jails, Linux-VServer and numerous others, going beyond filesystem support for isolating processes, and providing a more comprehensive illusion of a distinct machine. Here, systems like Plan 9 showed how the Unix tradition might have evolved to support such needs, with Linux and other systems borrowing ideas such as namespaces and applying them in various, sometimes clumsy, ways to support the configuration of program execution environments.

Going further, technologies exist to practically simulate the experience of an entirely separate machine, these often bearing the “virtual machine” label in the vocabulary of our current era. A prime example of such a technology is KVM, available on Linux with the right kind of processor, which allows entire operating systems to run within another. Using a virtual machine solution of this nature is something of a luxury option for an application needing its own environment, being able to have precisely the software configuration of its choosing right down to the level of the kernel. One disadvantage of such full-fat virtual machines is the amount of extra software involved and those layers upon layers of programs and mechanisms, all requiring management and integration.

Some might argue for solutions where the host environment does very little and where everything of substance is done in one kind of virtual machine or other. But if all the virtual machines are being used to run the same general technology, such as flavours of Linux, one has to wonder whether it is worth keeping a distinct hypervisor technology around. That might explain the emergence of KVM as an attempt to have Linux act as a kind of hypervisor platform, but it does not excuse a situation where the hosting of entire systems is done in preference to having a more configurable way of deploying applications within Linux itself.

Some adherents of hypervisor technologies advocate the use of unikernels as a way of deploying lightweight systems on top of hypervisors, specialised to particular applications. Such approaches seem reminiscent of embedded application deployment, with entire systems being built and tuned for precisely one job: useful for some domains but not generally applicable or particularly flexible. And it all feels like the operating system is just being reinvented in a suboptimal, ad-hoc fashion. (Unikernels seem to feature prominently in the “microkernel and component-based OS” developer room at FOSDEM these days.)

Then there is the approach advocated in Liam Proven’s talk, of stripping down an operating system for hypervisor deployment, which would need to offer a degree of extra flexibility to be more viable than a unikernel approach, at least when applied to the same kinds of problems. Of course, this pushes hardware support out of the operating system and into the realm of the hypervisor, which could be beneficial if done well, or it could imperil support for numerous hardware platforms and devices due to numerous technological, economic and social reasons. Liam advocates pushing filesystem support out of the kernel, and potentially out of the operating system as well, although it is not clear what would then need to take up that burden and actually offer filesystem facilities.

Some Reflections

This is where we may return to those complaints about the complexity of modern hosting frameworks, and to the observation that a need for total flexibility in every application’s software stack presents significant administrative challenges. But in considering the nature of the virtual machine in its historical forms, we might re-evaluate what kind of environment software really needs.

In my university studies, a project of mine investigated a relatively hot topic at the time: mobile software agents. One conclusion I drew from the effort was that programs could be written to use a set of well-defined interfaces and to potentially cooperate with other programs, without thousands of operating system files littering their shared environment. Naturally, such programs would not be running by magic: they would need to be supported by infrastructure that allows them to be loaded and executed, but all of this infrastructure can be maintained outside the environment seen by these programs.

At the time, I relied upon the Python language runtime for my agent programs with its promising but eventually inadequate support for safe execution to prevent programs from seeing the external machine environment. Most agent frameworks during this era were based on particular language technologies, and the emergence of Java only intensified the industry’s focus on this kind of approach, naturally emphasising Java, although Inferno also arrived at around this time and offered a promising, somewhat broader foundation for such work than the Java Virtual Machine.

In the third part of his article series, Liam Proven notes that Plan 9, Inferno’s predecessor, is able to provide a system where “every process is in a container” by providing support for customisable process namespaces. Certainly, one can argue that Plan 9 and Inferno have been rather overlooked in recent years, particularly by the industry mainstream. He goes on to claim that such functionality, potentially desirable in application hosting environments, “makes the defining features of microkernels somewhat irrelevant”. Here I cannot really agree: what microkernels actually facilitate goes beyond what a particular operating system can do and how it has been designed.
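The notion of a customisable, per-process namespace can be sketched as a table of bindings that each process inherits as a copy and may then alter privately. This is only a toy model in Python and not Plan 9’s actual interface: the Namespace class, its method names, and the provider labels “host-fs” and “private-net” are all hypothetical.

```python
class Namespace:
    """A per-process table binding name prefixes to providers (hypothetical)."""

    def __init__(self, bindings=None):
        self.bindings = dict(bindings or {})

    def bind(self, prefix, provider):
        """Attach a provider at a prefix, visible only through this namespace."""
        self.bindings[prefix.rstrip("/") or "/"] = provider

    def fork(self):
        """Give a new process its own copy of the table, as a starting point."""
        return Namespace(self.bindings)

    def resolve(self, path):
        """Find the provider with the longest matching prefix for a path."""
        best = None
        for prefix in self.bindings:
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                if best is None or len(prefix) > len(best):
                    best = prefix
        if best is None:
            raise FileNotFoundError(path)
        return self.bindings[best], path[len(best):].lstrip("/")

parent = Namespace({"/": "host-fs"})
child = parent.fork()
child.bind("/net", "private-net")   # only the child sees this binding

print(child.resolve("/net/tcp"))
print(parent.resolve("/net/tcp"))
```

The same name leads to different providers depending on which process is asking, which is the sense in which each process can be said to live in its own container.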

A microkernel-based approach not only affords the opportunity to define the mechanisms of any resulting system, but it also provides the ability to define multiple sets of mechanisms, all of them potentially available at once, allowing them to be investigated, compared, and even combined. For example, Linux retains the notion of a user of the system, maintaining a global registry of such users, and even with notionally distinct sets of users provided by user namespaces, cumbersome mappings are involved to relate those namespace users back to this global registry. In a truly configurable system, there can be multiple user authorities, each being accessible by an arbitrary selection of components, and some components can be left entirely unaware of the notion of a user whatsoever.
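To make the contrast with a single global registry a little more concrete, here is a small Python sketch in which user authorities are ordinary objects handed to whichever components need them. It does not describe any existing microkernel system: UserAuthority, Component and the example names are invented for this illustration.

```python
class UserAuthority:
    """An independent registry of users, with no global counterpart."""

    def __init__(self, name):
        self.name = name
        self._users = {}

    def add_user(self, username):
        uid = len(self._users) + 1
        self._users[username] = uid
        return uid

    def lookup(self, username):
        return self._users.get(username)

class Component:
    """A program that may, or may not, be given access to a user authority."""

    def __init__(self, name, authority=None):
        self.name = name
        self.authority = authority

    def identify(self, username):
        if self.authority is None:
            return None  # this component has no notion of a user at all
        uid = self.authority.lookup(username)
        return None if uid is None else (self.authority.name, uid)

customers = UserAuthority("customers")
staff = UserAuthority("staff")
customers.add_user("alice")
staff.add_user("alice")

shop = Component("shop", customers)
payroll = Component("payroll", staff)
renderer = Component("renderer")  # deliberately unaware of users

print(shop.identify("alice"), payroll.identify("alice"), renderer.identify("alice"))
```

The two users named “alice” are unrelated, since each identity only has meaning relative to its own authority, and no mapping back to a system-wide registry is required.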

Back in the 1990s, much coverage was given to the notion of operating system personalities: various products would, for example, support DOS or Windows applications as well as Macintosh ones or Unix ones or OS/2 ones. Whether the user interface would reflect this kind of personality on a global level or not probably kept some usability professionals busy, and I recall one of my university classmates talking about a system where it was apparently possible to switch between Windows or maybe OS/2 and Macintosh desktops with a key combination. Since his father was working at IBM, if I remember correctly, that could have been an incarnation of IBM’s Workplace OS.

Other efforts were made to support multiple personalities in the same system, potentially in a more flexible way than having multiple separate sessions, and certainly more flexible than just bundling up, virtualising or emulating the corresponding environments. Digital investigated the porting of VMS functionality to an environment based on the Mach 3.0 microkernel and associated BSD Unix facilities. Had Digital eventually adopted a form of OSF/1 based on Mach 3.0, it could have conceivably provided a single system running Unix and VMS software alongside each other, sharing various common facilities.

Regardless of one’s feelings about Mach 3.0, whether one’s view of microkernels is formed from impressions of an infamous newsgroup argument from over thirty years ago, or whether it considers some of the developments in the years since, combining disparate technologies in a coherent fashion within the same system must surely be a desirable prospect. Being able to do so without piling up entire systems on top of each other and drilling holes between the layers seems like a particularly desirable thing to do.

A flexible, configurable environment should appeal to those in the same position as the FOSDEM presenter wishing to solve his hosting problems with pruned-down software stacks, as well as appealing to anyone with their own unrealised ambitions for things like mobile software agents. Naturally, such a configurable environment would come with its own administrative overheads, like the need to build and package applications for deployment in more minimal environments, and the need to keep software updated once deployed. Some of that kind of work should arguably get done under the auspices of existing distribution frameworks and initiatives, as opposed to having random bundles of software pushed to various container “hubs” posing as semi-official images, all the while weighing down the Internet with gigabytes of data constantly scurrying hither and thither.

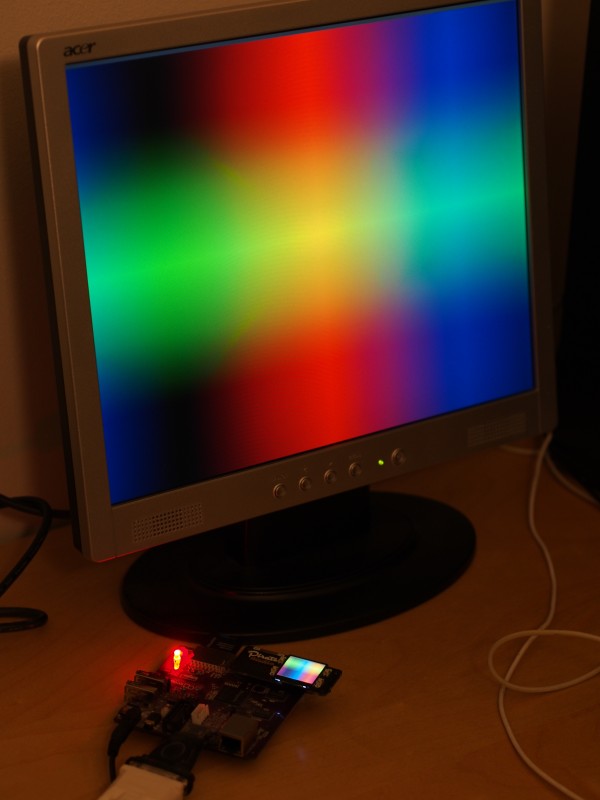

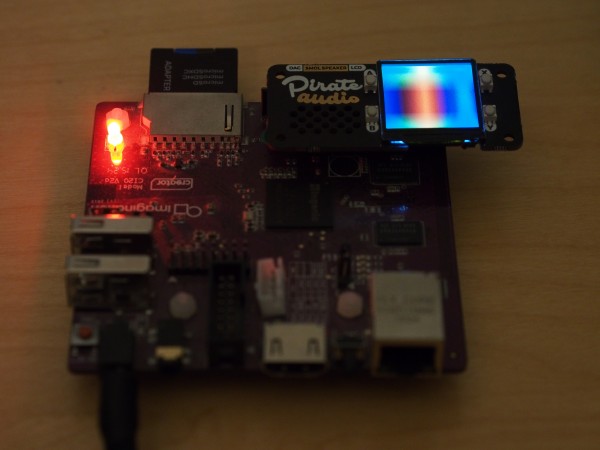

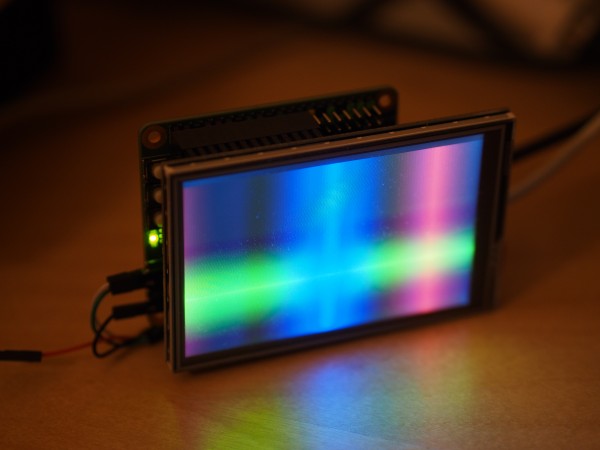

This article does not propose any specific solution or roadmap for any of this beyond saying that something should indeed be done, and that microkernel-based environments, instead of seeking to reproduce Unix or Windows all over again, might usefully be able to provide remedies that we might consider. And with that, I suppose I should get back to my own experiments in this area.