On forms of apparent progress

Sunday, May 8th, 2022

Over the years, I have had a few things to say about technological change, churn, and the appearance of progress, a few of them touching on the evolution and development of the Python programming language. Some of my articles have probably seemed a bit outspoken, perhaps even unfair. It was somewhat reassuring, then, to encounter the reflections of a longstanding author of Python books and his use of rather stronger language than I think I ever used. It was also particularly reassuring because I apparently complain about things in far too general a way, not giving specific examples of phenomena for anything actionable to be done about them. So let us see whether we can emerge from the other end of this article in better shape than we are at this point in it.

Now, the longstanding author in question is none other than Mark Lutz, whose books “Programming Python” and “Learning Python” must surely have been bestsellers for their publisher over the years. As someone who has, for many years, been teaching Python to a broad audience of newcomers to the language and to programming in general, his views overlap with mine about how Python has become increasingly incoherent and overly complicated, as its creators or stewards pursue some kind of agenda of supposed improvement without properly taking into account the needs of the broadest reaches of its user community. Instead, as with numerous Free Software projects, an inscrutable “vision” is used to impose change based on aesthetics and contemporary fashions, unrooted in functional need, by self-appointed authorities who often lack an awareness or understanding of historical precedent or genuine user need.

Such assertions are perhaps less kind to Python’s own developers than they should be. Those choosing to shoehorn new features into Python arguably have more sense of precedent than, say, the average desktop environment developer imitating Apple in what could uncharitably be described as an ongoing veiled audition for a job in Cupertino. Nevertheless, I feel that language developers would be rather more conservative if they only considered what teaching their language to newcomers entails or what effect their changes have on the people who have written code in their language. Am I being unfair? Let us read what Mr Lutz has to say on the matter:

The real problem with Python, of course, is that its evolution is driven by narcissism, not user feedback. That inevitably makes your programs beholden to ever-shifting whims and ever-hungry egos. Such dependencies might have been laughable in the past. In the age of Facebook, unfortunately, this paradigm permeates Python, open source, and the computer field. In fact, it extends well beyond all three; narcissism is a sign of our times.

You won’t find a shortage of similar sentiments on his running commentary of Python releases. Let us, then, take a look at some experiences and try to review such assertions. Maybe I am not being so unreasonable (or impractical) in my criticism after all!

Out in the Field

In a recent job, of which more might be written another time, Python was introduced to people more familiar with languages such as R (which comes across as a terrible language, but again, another time perhaps). It didn’t help that as part of that introduction, they were exposed to things like this:

    def method(self, arg: Dict[Something, SomethingElse]):
        return arg.items()

When newcomers are already having to digest new syntax, new concepts (classes and objects!), and why there is a “self” parameter, unnecessary ornamentation such as the type annotations included in the above only increases the cognitive burden. It also doesn’t help to then say, “Oh, the type declarations are optional and Python doesn’t really check them, anyway!” What is the student supposed to do with that information? Many years ago now, Java was mocked for confronting its newcomers with boilerplate like this classic:

public static void main(String[] args)

But exposing things that the student is then directed to ignore is simply doing precisely the same thing for which Java was criticised. Of course, in Python, the above method could simply have been written as follows:

    def method(self, arg):
        return arg.items()

Indeed, for the above method to be valid in the broadest sense, the only constraint on the nature of the “arg” parameter is that it offer an attribute called “items” that can be called with no arguments. By prescriptively imposing a limitation on “arg” as was done above, insisting that it be a dictionary, the method becomes less general and less usable. Moreover, the nature of Python itself is neglected or mischaracterised: the student might believe that only a certain type would be acceptable, just as one might suggest that the author of that code also fails to see that a range of different, conformant kinds of objects could be used with the method. Such practices discourage or conceal polymorphism and generic functionality at a point when the beginner’s mind should be opened to them.
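To make that point concrete, here is a minimal sketch rather than anything taken from the course material in question. The class names “Inventory” and “Example”, and the method name “annotated”, are hypothetical and introduced purely for illustration. It shows the unannotated method working with any object that offers a callable “items” attribute, and it also shows that, when the code is simply run without a separate type checker, the annotated variant accepts a non-dictionary object just the same.

```python
from collections import OrderedDict
from typing import Dict


class Inventory:
    """Hypothetical non-dictionary class that merely offers items()."""

    def __init__(self, pairs):
        self._pairs = list(pairs)

    def items(self):
        return list(self._pairs)


class Example:
    def method(self, arg):
        # No annotation: anything with a callable "items" attribute will do.
        return arg.items()

    def annotated(self, arg: Dict[str, int]):
        # The annotation is recorded, but nothing checks it when this runs.
        return arg.items()


example = Example()
from_dict = list(example.method({"a": 1}))
from_ordered = list(example.method(OrderedDict(b=2)))
from_custom = list(example.method(Inventory([("c", 3)])))
from_annotated = list(example.annotated(Inventory([("d", 4)])))
print(from_dict, from_ordered, from_custom, from_annotated)
```

All four calls succeed, which is arguably the lesson a beginner should be taking away: the method cares about what its argument can do, not about what it has been declared to be.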

As Mr Lutz puts such things in the context of a different feature introduced in Python 3.5:

To put that another way: unless you’re willing to try explaining a new feature to people learning the language, you just shouldn’t do it.

The tragedy is that Python in its essential form is a fairly intuitive and readable language. But as he also says in the specific context of type annotations:

Thrashing (and rudeness) aside, the larger problem with this proposal’s extensions is that many programmers will code them—either in imitation of other languages they already know, or in a misguided effort to prove that they are more clever than others. It happens. Over time, type declarations will start appearing commonly in examples on the web, and in Python’s own standard library. This has the effect of making a supposedly optional feature a mandatory topic for every Python programmer.

And I can certainly say from observation that in various professional cultures, including academia where my own recent observations were made, there is a persistent phenomenon where people demonstrate “best practice” to show that they as a software development practitioner (or, indeed, a practitioner of anything else related to the career in question) are aware of the latest developments, are able to communicate them to their less well-informed colleagues, and are presumably the ones who should be foremost in anyone’s consideration for any future hiring round or promotion. Unfortunately, this enthusiasm is not always tempered by considered reflection, either on the nature of the supposed innovation itself, or on the consequences its proliferation will have.

Perversely, such enthusiasm, provoked by the continual hustle for funding, positions, publications and reputation, risks causing a trail of broken programs, and yet at the same time, much is made of the need for software development to be done “properly” in academia, that people do research that is reproducible and whose computational elements are repeatable. It doesn’t help that those ambitions must also be squared with other apparent needs such as offering tools and services to others. And the need to offer such things in a robust and secure fashion sometimes has to coexist with the need to offer them in a convenient form, where appropriate. Taking all of these things into consideration is quite the headache.

A Positive Legacy

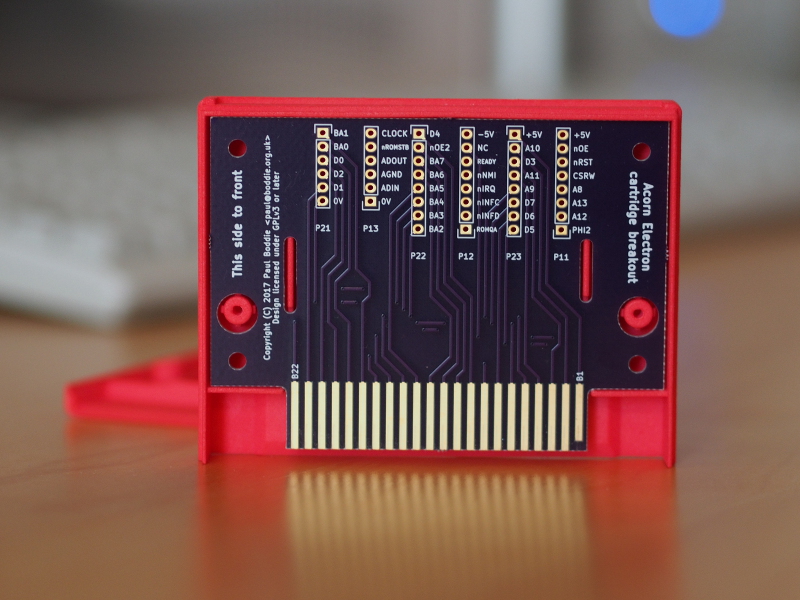

Amusingly, some have come to realise that Python’s best hope for reproducible research is precisely the thing that Python’s core developers have abandoned – Python 2.7 – and precisely because they have abandoned it. In an article about reproducing old, published results, albeit of a rather less than scientific nature, Nicholas Rougier sought to bring an old program back to life, aiming to find a way of obtaining or recovering the program’s sources, constructing an executable form of the program, and deploying and running that program on a suitable system. Running his old program, written for the Apple IIe microcomputer in Applesoft BASIC, required the use of emulators and, for complete authenticity, modern hardware expansions to transfer the software to floppy disks to run on an original Apple IIe machine.

And yet, the ability to revive and deploy a program developed 32 years earlier was possible thanks to the Apple machine’s status as a mature, well-understood platform with an enthusiastic community developing new projects and products. These initiatives were only able to offer such extensive support for a range of different “retrocomputing” activities because the platform has for a long time effectively been “frozen”. Contrasting such a static target with rapidly evolving modern programming languages and environments, Rougier concluded that “an advanced programming language that is guaranteed not to evolve anymore” would actually be a benefit for reproducible science, that few people use many of the new features of Python 3, and that Python 2.7 could equally be such a “highly fertile ground for development” that the proprietary Applesoft BASIC had proven to be for a whole community of developers and users.

Naturally, no language designer ever wants to be told that their work is finished. Lutz asserts that “a bloated system that is in a perpetual state of change will eventually be of more interest to its changers than its prospective users”, which is provocative but also rings true. CPython (the implementation of Python in the C programming language) has always had various technical deficiencies – the lack of proper multithreading, for instance – but its developers who also happen to be the language designers seem to prefer tweaking the language instead. Other languages have gained in popularity at Python’s expense by seeking to address such deficiencies and to meet the frustrated expectations of Python developers. Or as Lutz notes:

While Python developers were busy playing in their sandbox, web browsers mandated JavaScript, Android mandated Java, and iOS became so proprietary and closed that it holds almost no interest to generalist developers.

In parts of academia familiar with Python, languages like Rust and Julia are now name-dropped, although I doubt that many of those doing the name-dropping realise what they are in for if they decide to write everything in Rust. Meanwhile, Python 2 code is still used, against a backdrop of insistent but often ignored requests from systems administrators for people to migrate code to Python 3 so that newer operating system distributions can be deployed. In other sectors, such migration is meant to be factored into the cost of doing business, but in places like academia where software maintenance generally doesn’t get funding, no amount of shaming or passive-aggressive coercion is magically going to get many programs updated at all.

Of course, we could advocate that everybody simply run their old software in virtual machines or containers, just as was possible with that Applesoft BASIC program from over thirty years ago. Indeed, containerisation is the hot thing in places like academia just as it undoubtedly is elsewhere. But unlike the Apple II community who will hopefully stick with what they know, I have my doubts that all those technological lubricants marketed under the buzzword “containers!” will still be delivering the desired performance decades from now. As people jump from yesterday’s hot solution to today’s and on to tomorrow’s (Docker, with or without root, to Singularity/Apptainer, and on to whatever else we have somehow deserved), just the confusion around the tooling will be enough to make the whole exercise something of an ordeal.

A Detour to the Past

Over the last couple of years, I have been increasingly interested in looking back over the course of the last few decades, back to the time when I was first introduced to microcomputers, and even back beyond that to the age of mainframes when IBM reigned supreme and the likes of ICL sought to defend their niche and to remain competitive, or even relevant, as the industry shifted beneath them. Obviously, I was not in a position to fully digest the state of the industry as a schoolchild fascinated with the idea that a computer could seemingly take over a television set and show text and graphics on the screen, and I was certainly not “taking” all the necessary computing publications to build up a sophisticated overview, either.

But these days, many publications from decades past – magazines, newspapers, academic and corporate journals – are available from sites like the Internet Archive, and it becomes possible to sample the sentiments and mood of the times, frustrations about the state of then-current, affordable technology, expectations of products to come, and so on. Those of us who grew up in the microcomputing era saw an obvious progression in computing technologies: faster processors, more memory, better graphics, more and faster storage, more sophisticated user interfaces, increased reliability, better development tools, and so on. Technologies such as Unix were “the future”, labelled as impending to the point of often being ridiculed as too expensive, too demanding or too complicated, perhaps never to see the limelight after all. People were just impatient: we got there in the end.

While all of that was going on, other trends were afoot at the lowest levels of computing. Computer instruction set architectures had become more complicated as the capabilities they offered had expanded. Although such complexity, broadly categorised using labels such as CISC, had been seen as necessary or at least desirable to be able to offer system implementers a set of convenient tools to more readily accomplish their work, the burden of delivering such complexity risked making products unreliable, costly and late. For example, the National Semiconductor 32016 processor, seeking to muscle in on the territory of Digital Equipment Corporation and its VAX line of computers, suffered delays in getting to market and performance deficiencies that impaired its competitiveness.

Although capable and in some respects elegant, it turned out that these kinds of processing architectures were not necessarily delivering what was actually important, either in terms of raw performance for end-users or in terms of convenience for developers. Realisations were had that some of the complexity was superfluous, that programmers did not use certain instructions often or at all, and that a flawed understanding of programmers’ needs had led to the retention of functionality that did not need to be inscribed in silicon with all the associated costs and risks that this would entail. Instead, simpler, more orthogonal architectures could be delivered that offered instructions that programmers or, crucially, their compilers would actually use. The idea of RISC was thereby born.

As the concept of RISC took off, pursued by the likes of IBM, UCB and Sun, Stanford University and MIPS, Acorn (and subsequently ARM), HP, and even Digital, Intel and Motorola, amongst others, the concept of the workstation became more fully realised. It may have been claimed by some commentator or other that “the personal computer killed the workstation” or words to that effect, but in fact, the personal computer effectively became the workstation during the course of the 1990s and early years of the twenty-first century, albeit somewhat delayed by Microsoft’s sluggish delivery of appropriately sophisticated operating systems throughout its largely captive audience.

For a few people in the 1980s, the workstation vision was the dream: the realisation of their expectations for what a computer should do. Although expectations should always be updated to take new circumstances and developments into account, it is increasingly difficult to see the same rate of progress in this century’s decades that we saw in the final decades of the last century, at least in terms of general usability, stability and the emergence of new and useful computational capabilities. Some might well argue that graphics and video processing or networked computing have progressed immeasurably, these certainly having delivered benefits for visualisation, gaming, communications and the provision of online infrastructure, but in other regards, we seem stuck with something very similar to that of twenty years ago but with increasingly disillusioned developers and disempowered users.

What we might take away from this historical diversion is that sometimes a focus on the essentials, on simplicity, and on the features that genuinely matter makes more of a difference than just pressing ahead with increasingly esoteric and baroque functionality that benefits few and yet brings its own set of risks and costs. And we should recognise that progress is largely acknowledged only when it delivers perceptible benefits. In terms of delivering a computer language and environment, this may necessarily entail emphasising the stability and simplicity of the language, focusing instead on remedying the deficiencies of the underlying language technology to give users the kind of progress they might actually welcome.

A Dark Currency

Mark Lutz had intended to stop commentating on newer versions of Python, reflecting on the forces at work that make Python what it now is:

In the end, the convolution of Python was not a technical story. It was a sociological story, and a human story. If you create a work with qualities positive enough to make it popular, but also encourage it to be changed for a reward paid entirely in the dark currency of ego points, time will inevitably erode the very qualities which made the work popular originally. There’s no known name for this law, but it happens nonetheless, and not just in Python. In fact, it’s the very definition of open source, whose paradigm of reckless change now permeates the computing world.

I also don’t know of a name for such a law of human behaviour, and yet I have surely mentioned such behavioural phenomena previously myself: the need to hustle, demonstrate expertise, audition for some potential job offer, demonstrate generosity through volunteering. In some respects, the cultivation of “open source” as a pragmatic way of writing software collaboratively, marginalising Free Software principles and encouraging some kind of individualistic gift culture coupled to permissive licensing, is responsible for certain traits of what Python has become. But although a work that is intrinsically Free Software in nature may facilitate chaotic, haphazard, antisocial, selfish, and many other negative characteristics in the evolution of that work, it is the social and economic environment around the work that actually promotes those characteristics.

When reflecting on the past, particularly during periods when capabilities were being built up, we can start to appreciate the values that might have been more appreciated at that time than they are now. Python originated at a time when computers in widespread use were becoming capable enough to offer such a higher-level language, one that could offer increased convenience over various systems programming languages whilst building on top of the foundations established by those languages. With considerable effort having been invested in such foundations, a mindset seemed to persist, at least in places, that such foundations might be enduring and be good for a long time.

An interesting example of such attitudes arose at a lower level with the development of the Alpha instruction set architecture. Digital, having responded ineffectively to its competitive threats, embraced the RISC philosophy and eventually delivered a processor range that could be used to support its existing product line-up, emphasising performance and longevity through a “15- to 25-year design horizon” that attempted to foresee the requirements of future systems. Sadly, Digital made some poor strategic decisions, some arguably due to Microsoft’s increasing influence over the company’s strategy, and after a parade of acquisitions, Alpha fell under the control of HP who sacrificed it, along with its own RISC architecture, to commit to Intel’s dead-end Itanium architecture. I suppose this illustrates that the chaos of “open source” is not the only hazard threatening stability and design for longevity.

Such long or distant horizons demand that newer developments remain respectful to the endeavours that have made them possible. Such existing and ongoing endeavours may have their flaws, but recognising and improving those flaws is more constructive and arguably more productive than tearing everything down and demanding that everything be redone to accommodate an apparently new way of thinking. Sadly, we see a lot of the latter these days, but it goes beyond a lack of respect for precedent and achievement, reflecting broader tendencies in our increasingly stressed societies. One such tendency is that of destructive competition, the elimination of competitors, and the pursuit of monopoly. We might be used to seeing such things in the corporate sphere – the likes of Microsoft wanting to be the only ones who provide the software for your computer, no matter where you buy it – but people have a habit of imitating what they see, especially when the economic model for our societies increasingly promotes the hustle for work and the need to carve out a lucrative niche.

So, we now see pervasive attitudes such as the pursuit of the zero-sum game. Where the deficiencies of a technology lead its users to pursue alternatives, defensiveness in the form of utterances such as “no need to invent another language” arises. Never mind that the custodians of the deficient technology – in this case, Python, of course – happily and regularly offer promotional consideration to a company who openly tout their own language for mobile development. Somehow, the primacy of the Python language is a matter for its users to bear, whereas another rule applies amongst its custodians. That is another familiar characteristic of human behaviour, particularly where power and influence accumulate.

And so, we now see hostility towards anything being perceived as competition, even if it is merely an independent endeavour undertaken by someone wishing to satisfy their own needs. We see intolerance for other solutions, but we also see a number of other toxic behaviours on display: alpha-dogging, personality worship and the cultivation of celebrity. We see chest-puffing displays of butchness about Important Matters like “security”. And, of course, the attitude to what went before is the kind of approach that involves boiling the oceans so that they may be populated by precisely the right kind of fish. None of this builds on or complements what is already there, nor does it deliver a better experience for the end-user. No wonder people say that they are jealous of colleagues who are retiring.

All these things make it unappealing to share software or even ideas with others. Fortunately, if one does not care about making a splash, one can just get on with things that are personally interesting and ignore all the “negativity from ignorant, opinionated blowhards”. Although in today’s hustle culture, this means also forgoing the necessary attention that might prompt anyone to discover your efforts and pay you to do such work. On the actual topic that has furnished us with so many links to toxic behaviour, and on the matter of the venue where such behaviour is routine, I doubt that I would want my own language-related efforts announced in such a venue.

Then again, I seem to recall that I stopped participating in that particular venue after one discussion had a participant distorting public health observations by the likes of Hans Rosling to despicably indulge in poverty denial. Once again, broader social, economic and political influences weigh heavily on our industry and communities, with people exporting their own parochial or ignorant views globally, and in the process corrupting and undermining other people’s societies, oblivious to the misery it has already caused in their own. Against this backdrop, simple narcissism is perhaps something of a lesser concern.

At the End of the Tunnel

I suppose I promised some actionable observations at the start of the article, so what might they be?

Respect Users and Investments

First of all, software developers should be respectful towards the users of their software. Such users lend validation to that software, encourage others to use it, and they potentially make it possible for the developers to work on it for a living. Their use involves an investment that, if written off by the developers, is costly for everyone concerned.

And no, the users’ demands for that investment to be protected cannot be disregarded as “entitlement”, even if they paid nothing to acquire the software, at least if the developers are happy to enjoy all the other benefits of the software’s proliferation. As is often said, power and influence bring responsibility. Just as democratically elected politicians have a responsibility towards everyone they represent, regardless of whether those people voted for them or not, software developers have a duty of care towards all of their users, even if it is merely to step out of the way and to let the users take the software in their own direction without seeking to frustrate them as we saw when Python 2 was cast aside.

Respond to User Needs Constructively

Developers should also be responsive to genuine user needs. If you believe all the folklore about the “open source” way, it should have been precisely people’s own genuine needs that persuaded them to initiate their own projects in the first place. It is entirely possible that a project may start with one kind of emphasis and demand one kind of skills only to evolve towards another emphasis or to require other skills. With Python, much of the groundwork was laid in the 1990s, building an interpreter and formulating a capable language. But beyond that initial groundwork, the more pressing challenges lay outside the language design domain and went beyond the implementation of a simple interpreter.

Improved performance and concurrency, both increasingly expected by users, required the application of other skills that might not have been present in the project. And yet, the elaboration of the language continued, with the developers susceptible to persuasion by outsiders engaging in “alpha-dogging” or even insiders with an inferiority complex, being made to feel that the language was not complete or even adequate since it lacked features from the pet languages of those outsiders or of the popular language of the day. Development communities should welcome initiatives to improve their projects in ways that actually benefit the users, and they should resist the urge to muscle in on such initiatives by seeking to demonstrate that they have the necessary solutions when their track record would indicate otherwise. (Or worse still, by reframing user needs in terms of their own narrow agenda as if to say, “Here is what you are really asking for.” Another familiar trait of the “visionary” desktop developer.)

Respect Other Solutions

Developers and commentators more generally should accept and respect the existence of other technologies and solutions. Just because they have their own favourite solution does not de-legitimise something they have just been made aware of. Maybe it is simply not meant for them. After all, not everything that happens in this reality is part of a performance exclusively for any one person’s benefit, despite what some people appear to think. And the existence of other projects doing much the same thing is not necessarily “wasted effort”: another concept introduced from some cult of economics or other.

It is entirely possible to provide similar functionality in different ways, and the underlying implementations may lend those different projects different characteristics – portability, adaptability, and so on – even if the user sees largely the same result on their screen. Maybe we do want to encourage different efforts even for fundamental technologies or infrastructure, not because anyone likes to “waste effort”, but because it gives the systems we build a level of redundancy and resilience. And maybe some people just work better with certain other people. We should let them, as opposed to forcing them to fit in with tiresome, exploitative and time-wasting development cultures, to suffer rudeness and general abuse, simply to go along with an exercise that props up some form of corporate programme of minimal investment in the chosen solution of industry and various pundits.

Develop for the Long Term and for Stability

Developers should make things that are durable so that they may be usable for many years to come. Or they should at least expect that people may want to use them years or even decades from now. Just because something is old does not mean it is bad. Much of what we use today is based on technology that is old, with much of that technology effectively coming of age decades ago. We should be able to enjoy the increased performance of our computers, not have it consumed by inefficient software that drives the hardware and other software into obsolescence. Technological fads come and go (and come back again): people in the 1990s probably thought that virtual reality would be pervasive by now, but experience should permit us to reflect and to recognise that some things were (and maybe always will be) bad ideas and that we shouldn’t throw everything overboard to pander to them, only to regret doing so later.

We live in a world where rapid and uncomfortable change has been normalised, but where consumerism has been promoted as the remedy. Perhaps some old way of doing something mundane doesn’t work any more – buying something, interacting with public agencies, fulfilling obligations, even casting votes in some kinds of elections – perhaps because someone has decided that money can be saved (and, of course, soon wasted elsewhere) if it can be done “digitally” from now on. To keep up, you just need a smartphone, or a newer smartphone, with an “app”, or the new “app”, and a subscription to a service, and another one. And so on. All of that “works” for people as long as they have the necessary interest, skills, time, and money to spend.

But as the last few years have shown, it doesn’t take much to disrupt these unsatisfactory and fragile arrangements. Nobody advocating fancy “digital” solutions evidently considered that people would not already have everything they need to access their amazing creations. And when, as they say, neither love nor money can get you the gadgets you need, it doesn’t even matter how well-off you are: suddenly you get a downgrade in experience to a level that, as a happy consumer, you probably didn’t even know still existed, even if it is still the reality for whole sections of our societies. We have all seen how narrow the margins are between everything apparently being “just fine” and there being an all-consuming crisis, both on a global level and, for many, on a personal level, too.

Recognise Responsibilities to Others

Change can be a positive thing if it carries everyone along and delivers actual progress. Meanwhile, there are those who embrace disruption as a form of change, claiming it to be a form of progress, too, but that form of change is destructive, harmful and exclusionary. It should not be a surprise that prominent advocates of a certain political movement advocate such disruptive change: for them, it doesn’t matter how many people suffer by the ruinous change they have inflicted on everyone as long as they are the ones to benefit; everyone else can wait fifty years or so to see some kind of consolation for the things taken from them, apparently.

As we deliver technology to others, we should not be the ones deepening any misery already experienced by imposing needless and costly change. We should be letting people catch up with the state of technology and allowing them to be comfortable with it. We should invest in long-term solutions that address people’s needs, and we should refuse to be shamed into playing the games of opportunists and profiteers who ridicule anything old or familiar in favour of what they happen to be promoting today. We should demand that people’s investments in hardware and software be protected, that they are not effectively coerced into constantly buying new things and seeing their living standards diminished in other ways, with such consumption burdening our planet’s ecosystem and resources.

Just as we all experience that others have power over us, so we might recognise the power we have over other people. And just as we might expect others to consider our interests, so we might consider the interests of those who have to put up with our decisions. Maybe, in the end, all I am doing is asking for people to show some consideration for the experiences of other people, that their lives not be made any harder than they might already be. Is that really too much to ask? Is that so hard to understand?