The Unplanned Obsolescence of the First Fairphone Device

Friday, December 12th, 2014

About a year-and-a-half ago, I gave my impressions of the Fairphone, noting that the initiative was worthy in terms of its social and sustainability goals, but that it had neglected the “fairness” of the software to be provided with each device. Although the Fairphone organisation had made “root access” – or more correctly stated, “owner control” – of the device a priority and had decided to provide its user interface enhancements to Android as Free Software, it had chosen to use a set of hardware technologies with a poor record of support for Free Software.

It might be said that such an initiative cannot possibly hope to act in the most prudent manner in every respect. However, unlike expertise in minerals sourcing, complicated global supply chains, and proprietary manufacturing activities, expertise on matters of hardware support for Free Software is available almost in abundance to anyone who can be bothered to ask. Many people already struggle with poorly-supported hardware for which only binary firmware or driver releases are available from the manufacturer, often resulting in incorrectly-performing hardware with no chance of future fixes as the manufacturer discontinues support in order to focus on selling new products. Others struggle with continuing but inconvenient forms of support on the manufacturer’s own timescale and terms.

Consequently, there are increasing numbers of people with experience of reverse engineering, documenting, and reimplementing firmware and drivers for proprietary hardware, many of whom would only be too happy to share their experiences with others wishing to avoid the pitfalls of being tied to a proprietary hardware vendor with a proprietary software mentality. There are also communities developing open hardware who seek out enlightened hardware vendors that encourage Free Software drivers for their products and may even support firmware that is also Free Software on their devices. There are even people developing smartphones in the open whose experiences and opinions would surely be valuable to anyone needing advice on the more reliable, open and trustworthy hardware vendors.

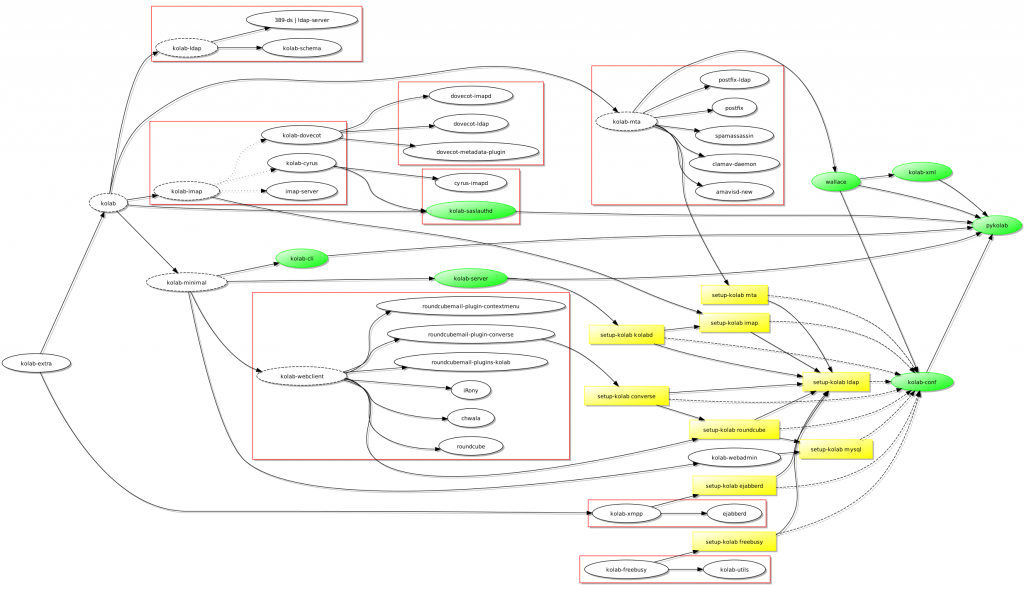

One community that has remained active despite various setbacks is the one pursuing the development of the EOMA-68 modular computing platform. It was precisely this kind of “ODM versus chipset vendor versus Free Software community” circus that prompted the development of an open platform and attempts to reach out and cultivate constructive communications with various silicon vendors. Such vendors, notably Allwinner Technology (in the case of EOMA-68), but also other companies that have previously been open to dialogue, have come to realise that Free Software is an asset, and that Free Software communities are their partners and not just a bunch of people whose work can be taken and used without paying attention to the terms under which that work was originally shared. Such dialogues are ongoing and are subject to setbacks as well as progress, but it is far better to cultivate good practices than to ignore bad practices and to dump the ugly result onto the end-user.

Now, the Fairphone organisation has started to reconsider the software issue in light of the real possibility that their device will not be upgradeable beyond an old release of Android:

“We are actively looking at ways to achieve this goal, but we’re trying to be realistic and face the fact that the first Fairphones will most likely not be upgraded beyond Android 4.2.”

Given that the viability of a device depends not only on the continued functioning of its hardware but also on the correct functioning of its software, and that many people cite access to a supported operating system distribution, or one that supports the applications they need or desire, as a reason for upgrading their phone, the unfortunate neglect of software sustainability has undermined the general sustainability of the device. It may very well be the case that the Fairphone organisation’s initiatives around re-use and recycling can mitigate the problems caused by any abandonment of these devices, as people seek out replacements that do what they demand of them. But one of the most potent goals, reducing consumption by providing a long-lasting device, has been undermined by the software: something that should be the easiest part of the product to change, maintain and upgrade, and that could even be used to remedy shortcomings in the chosen physical components; something whose lifespan is dictated far less by physical constraints than that of the assembly of physical components making up the device itself.

It is quite possible that certain industry practices have remained unknown to the Fairphone organisation, despite bitter experiences elsewhere, and that they are only now catching up with what many other people have learned over the years:

“Our chipset vendor MediaTek is only publicly releasing what it is bound to by the obligatory terms of the GNU public license GPL (the Linux Kernel and a few userland programs) and has chosen not to release any of the Android source code.”

Once again, the GPL demonstrates its worth as a necessary tool to ensure that the end-user remains in control. Unfortunately, Google decided that the often shoddy practices of its hardware and industry partners should be indulged by allowing them to make proprietary products with Google’s permissively-licensed code. It could be worse: some hardware vendors violate the GPL and blame their suppliers, requiring anyone seeking recourse to traverse the supply chain as far as it goes, potentially to some obscure company in a faraway land whose management plead poverty while actually doing very nicely selling their services to anyone willing to pay; others just appear to brazenly violate the GPL and dare someone to sue them.

The Fairphone organisation could have valued the sustainability benefits of Free Software and cooperative hardware vendors. In doing so, by merely asking for informed opinions, they would have avoided this mess entirely. Unfortunately, they may have focused too narrowly on certain worthy and necessary topics, maintaining an oversimplified view of software that, if mainstream media punditry is to be believed, is merely transient and interchangeable: something that can be made to run on any hardware as if by magic, with each upgrade replacing what was there before with something that is always better, only ever offering improvements and benefits. Those of us with more than a passing knowledge of systems development know that such beliefs are really delusions arising from a lack of experience or a wish to believe that unfamiliar things are easier than they actually are.

Since we cannot go back and change the way things were done before, I suppose that now is the time to deliver on the sustainability promise by fully and properly promoting and supporting Free Software on any future Fairphone device. This means that the Fairphone organisation has to start listening to people with experience of reliably deploying and supporting Free Software on open or properly-documented hardware, instead of going along with whatever some supplier (and their potentially GPL-violating associates) would have them do just to get the contract in the bag and the device out of the door.