EOMA68: The Campaign (and some remarks about recurring criticisms)

Thursday, August 18th, 2016I have previously written about the EOMA68 initiative and its objective of making small, modular computing cards that conform to a well-defined standard which can be plugged into certain kinds of device – a laptop or desktop computer, or maybe even a tablet or smartphone – providing a way of supplying such devices with the computing power they all need. This would also offer a convenient way of taking your computing environment with you, using it in the kind of device that makes most sense at the time you need to use it, since the computer card is the actual computer and all you are doing is putting it in a different box: switch off, unplug the card, plug it into something else, switch that on, and your “computer” has effectively taken on a different form.

(This “take your desktop with you” by actually taking your computer with you is fundamentally different to various dubious “cloud synchronisation” services that would claim to offer something similar: “now you can synchronise your tablet with your PC!”, or whatever. Such services tend to operate rather imperfectly – storing your files on some remote site – and, of course, exposing you to surveillance and convenience issues.)

Well, a crowd-funding campaign has since been launched to fund a number of EOMA68-related products, with an opportunity for those interested to acquire the first round of computer cards and compatible devices, those devices being a “micro-desktop” that offers a simple “mini PC” solution, together with a somewhat radically designed and produced laptop (or netbook, perhaps) that emphasises accessible construction methods (home 3D printing) and alternative material usage (“eco-friendly plywood”). In the interests of transparency, I will admit that I have pledged for a card and the micro-desktop, albeit via my brother for various personal reasons that also delayed me from actually writing about this here before now.

Of course, EOMA68 is about more than just conveniently taking your computer with you because it is now small enough to fit in a wallet. Even if you do not intend to regularly move your computer card from device to device, it emphasises various sustainability issues such as power consumption (deliberately kept low), long-term support and matters of freedom (the selection of CPUs that completely support Free Software and do not introduce surveillance backdoors), and device longevity (that when the user wants to upgrade, they may easily use the card in something else that might benefit from it).

This is not modularity to prove some irrelevant hypothesis. It is modularity that delivers concrete benefits to users (that they aren’t forced to keep replacing products engineered for obsolescence), to designers and manufacturers (that they can rely on the standard to provide computing functionality and just focus on their own speciality to differentiate their product in more interesting ways), and to society and the environment (by reducing needless consumption and waste caused by the upgrade treadmill promoted by the technology industries over the last few decades).

One might think that such benefits might be received with enthusiasm. Sadly, it says a lot about today’s “needy consumer” culture that instead of welcoming another choice, some would rather spend their time criticising it, often to the point that one might wonder about their motivations for doing so. Below, I present some common criticisms and some of my own remarks.

(If you don’t want to read about “first world” objections – typically about “new” and “fast” – and are already satisfied by the decisions made regarding more understandable concerns – typically involving corporate behaviour and licensing – just skip to the last section.)

“The A20 is so old and slow! What’s the point?”

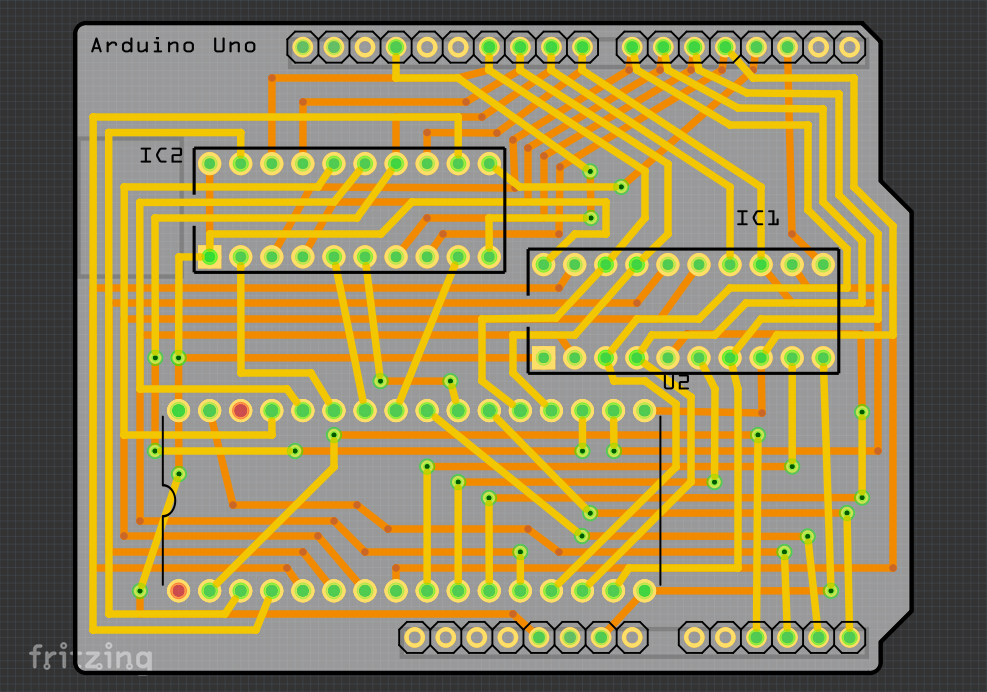

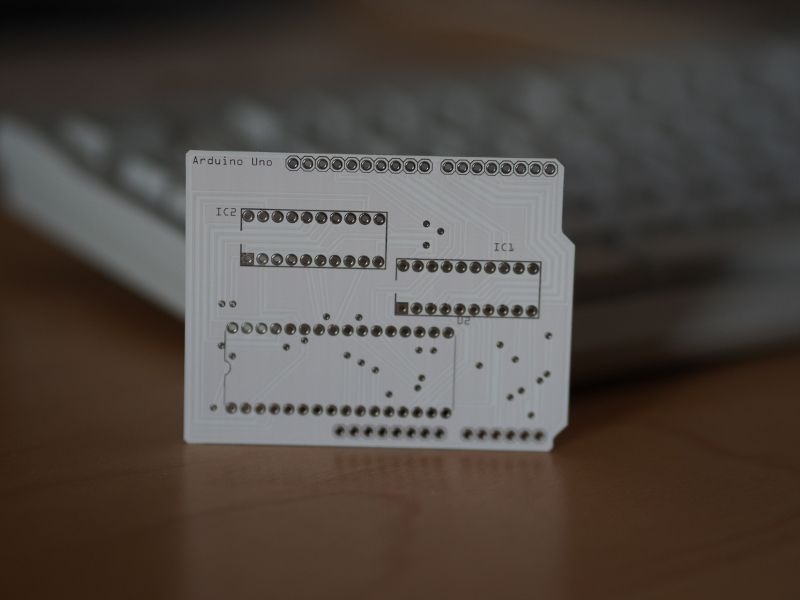

The Allwinner A20 has been around for a while. Indeed, its predecessor – the A10 – was the basis of initial iterations of the computer card several years ago. Now, the amount of engineering needed to upgrade the prototypes that were previously made to use the A10 instead of the A20 is minimal, at least in comparison to adopting another CPU (that would probably require a redesign of the circuit board for the card). And hardware prototyping is expensive, especially when unnecessary design changes have to be made, when they don’t always work out as expected, and when extra rounds of prototypes are then required to get the job done. For an initiative with a limited budget, the A20 makes a lot of sense because it means changing as little as possible, benefiting from the functionality upgrade and keeping the risks low.

Obviously, there are faster processors available now, but as the processor selection criteria illustrate, if you cannot support them properly with Free Software and must potentially rely on binary blobs which potentially violate the GPL, it would be better to stick to a more sustainable choice (because that is what adherence to Free Software is largely about) even if that means accepting reduced performance. In any case, at some point, other cards with different processors will come along and offer faster performance. Alternatively, someone will make a dual-slot product that takes two cards (or even a multi-slot product that provides a kind of mini-cluster), and then with software that is hopefully better-equipped for concurrency, there will be alternative ways of improving the performance to that of finding faster processors and hoping that they meet all the practical and ethical criteria.

“The RasPi 3…”

Lots of people love the Raspberry Pi, it would seem. The original models delivered a cheap, adequate desktop computer for a sum that was competitive even with some microcontroller-based single-board computers that are aimed at electronics projects and not desktop computing, although people probably overlook rivals like the BeagleBoard and variants that would probably have occupied a similar price point even if the Raspberry Pi had never existed. Indeed, the BeagleBone Black resides in the same pricing territory now, as do many other products. It is interesting that both product families are backed by certain semiconductor manufacturers, and the Raspberry Pi appears to benefit from privileged access to Broadcom products and employees that is denied to others seeking to make solutions using the same SoC (system on a chip).

Now, the first Raspberry Pi models were not entirely at the performance level of contemporary desktop solutions, especially by having only 256MB or 512MB RAM, meaning that any desktop experience had to be optimised for the device. Furthermore, they employed an ARM architecture variant that was not fully supported by mainstream GNU/Linux distributions, in particular the one favoured by the initiative: Debian. So a variant of Debian has been concocted to support the devices – Raspbian – and despite the Raspberry Pi 2 being the first device in the series to employ an architecture variant that is fully supported by Debian, Raspbian is still recommended for it and its successor.

Anyway, the Raspberry Pi 3 having 1GB RAM and being several times faster than the earliest models might be more competitive with today’s desktop solutions, at least for modestly-priced products, and perhaps it is faster than products using the A20. But just like the fascination with MHz and GHz until Intel found that it couldn’t rely on routinely turning up the clock speed on its CPUs, or everybody emphasising the number of megapixels their digital camera had until they discovered image noise, such number games ignore other factors: the closed source hardware of the Raspberry Pi boards, the opaque architecture of the Broadcom SoCs with a closed source operating system running on the GPU (graphics processing unit) that has control over the ARM CPU running the user’s programs, the impracticality of repurposing the device for things like laptops (despite people attempting to repurpose it for such things, anyway), and the organisation behind the device seemingly being happy to promote a variety of unethical proprietary software from a variety of unethical vendors who clearly want a piece of the action.

And finally, with all the fuss about how much faster the opaque Broadcom product is than the A20, the Raspberry Pi 3 has half the RAM of the EOMA68-A20 computer card. For certain applications, more RAM is going to be much more helpful than more cores or “64-bit!”, which makes us wonder why the Raspberry Pi 3 doesn’t support 4GB RAM or more. (Indeed, the current trend of 64-bit ARM products offering memory quantities addressable by 32-bit CPUs seems to have missed the motivation for x86 finally going 64-bit back in the early 21st century, which was largely about efficiently supporting the increasingly necessary amounts of RAM required for certain computing tasks, with Intel’s name for x86-64 actually being at one time “Extended Memory 64 Technology“. Even the DEC Alpha, back in the 1990s, which could be regarded as heralding the 64-bit age in mainstream computing, and which arguably relied on the increased performance provided by a 64-bit architecture for its success, still supported 64-bit quantities of memory in delivered products when memory was obviously a lot more expensive than it is now.)

“But the RasPi Zero!”

Sure, who can argue with a $5 (or £4, or whatever) computer with 512MB RAM and a 1GHz CPU that might even be a usable size and shape for some level of repurposing for the kinds of things that EOMA68 aims at: putting a general purpose computer into a wide range of devices? Except that the Raspberry Pi Zero has had persistent availability issues, even ignoring the free give-away with a magazine that had people scuffling in newsagents to buy up all the available copies so they could resell them online at several times the retail price. And it could be perceived as yet another inventory-dumping exercise by Broadcom, given that it uses the same SoC as the original Raspberry Pi.

Arguably, the Raspberry Pi Zero is a more ambiguous follow-on from the Raspberry Pi Compute Module that obviously was (and maybe still is) intended for building into other products. Some people may wonder why the Compute Module wasn’t the same success as the earlier products in the Raspberry Pi line-up. Maybe its lack of success was because any organisation thinking of putting the Compute Module (or, these days, the Pi Zero) in a product to sell to other people is relying on a single vendor. And with that vendor itself relying on a single vendor with whom it currently has a special relationship, a chain of single vendor reliance is formed.

Any organisation wanting to build one of these boards into their product now has to have rather a lot of confidence that the chain will never weaken or break and that at no point will either of those vendors decide that they would rather like to compete in that particular market themselves and exploit their obvious dominance in doing so. And they have to be sure that the Raspberry Pi Foundation doesn’t suddenly decide to get out of the hardware business altogether and pursue those educational objectives that they once emphasised so much instead, or that the Foundation and its manufacturing partners don’t decide for some reason to cease doing business, perhaps selectively, with people building products around their boards.

“Allwinner are GPL violators and will never get my money!”

Sadly, Allwinner have repeatedly delivered GPL-licensed software without providing the corresponding source code, and this practice may even persist to this day. One response to this has referred to the internal politics and organisation of Allwinner and that some factions try to do the right thing while others act in an unenlightened, licence-violating fashion.

Let it be known that I am no fan of the argument that there are lots of departments in companies and that just because some do some bad things doesn’t mean that you should punish the whole company. To this day, Sony does not get my business because of the unsatisfactorily-resolved rootkit scandal and I am hardly alone in taking this position. (It gets brought up regularly on a photography site I tend to visit where tensions often run high between Sony fanatics and those who use cameras from other manufacturers, but to be fair, Sony also has other ways of irritating its customers.) And while people like to claim that Microsoft has changed and is nice to Free Software, even to the point where people refusing to accept this assertion get criticised, it is pretty difficult to accept claims of change and improvement when the company pulls in significant sums from shaking down device manufacturers using dubious patent claims on Android and Linux: systems it contributed nothing to. And no, nobody will have been reading any patents to figure out how to implement parts of Android or Linux, let alone any belonging to some company or other that Microsoft may have “vacuumed up” in an acquisition spree.

So, should the argument be discarded here as well? Even though I am not too happy about Allwinner’s behaviour, there is the consideration that as the saying goes, “beggars cannot be choosers”. When very few CPUs exist that meet the criteria desirable for the initiative, some kind of nasty compromise may have to be made. Personally, I would have preferred to have had the option of the Ingenic jz4775 card that was close to being offered in the campaign, although I have seen signs of Ingenic doing binary-only code drops on certain code-sharing sites, and so they do not necessarily have clean hands, either. But they are actually making the source code for such binaries available elsewhere, however, if you know where to look. Thus it is most likely that they do not really understand the precise obligations of the software licences concerned, as opposed to deliberately withholding the source code.

But it may well be that unlike certain European, American and Japanese companies for whom the familiar regime of corporate accountability allows us to judge a company on any wrongdoing, because any executives unaware of such wrongdoing have been negligent or ineffective at building the proper processes of supervision and thus permit an unethical corporate culture, and any executives aware of such wrongdoing have arguably cultivated an unethical corporate culture themselves, it could be the case that Chinese companies do not necessarily operate (or are regulated) on similar principles. That does not excuse unethical behaviour, but it might at least entertain the idea that by supporting an ethical faction within a company, the unethical factions may be weakened or even eliminated. If that really is how the game is played, of course, and is not just an excuse for finger-pointing where nobody is held to account for anything.

But companies elsewhere should certainly not be looking for a weakening of their accountability structures so as to maintain a similarly convenient situation of corporate hypocrisy: if Sony BMG does something unethical, Sony Imaging should take the bad with the good when they share and exploit the Sony brand; people cannot have things both ways. And Chinese companies should comply with international governance principles, if only to reassure their investors that nasty surprises (and liabilities) do not lie in wait because parts of such businesses were poorly supervised and not held accountable for any unethical activities taking place.

It is up to everyone to make their own decision about this. The policy of the campaign is that the A20 can be supported by Free Software without needing any proprietary software, does not rely on any Allwinner-engineered, licence-violating software (which might be perceived as a good thing), and is merely the first step into a wider endeavour that could be conveniently undertaken with the limited resources available at the time. Later computer cards may ignore Allwinner entirely, especially if the company does not clean up its act, but such cards may never get made if the campaign fails and the wider endeavour never even begins in earnest.

(And I sincerely hope that those who are apparently so outraged by GPL violations actually support organisations seeking to educate and correct companies who commit such violations.)

“You could buy a top-end laptop for that price!”

Sure you could. But this isn’t about a crowd-funding campaign trying to magically compete with an optimised production process that turns out millions of units every year backed by a multi-billion-dollar corporation. It is about highlighting the possibilities of more scalable (down to the economically-viable manufacture of a single unit), more sustainable device design and construction. And by the way, that laptop you were talking about won’t be upgradeable, so when you tire of its performance or if the battery loses its capacity, I suppose you will be disposing of it (hopefully responsibly) and presumably buying something similarly new and shiny by today’s measures.

Meanwhile, with EOMA68, the computing part of the supposedly overpriced laptop will be upgradeable, and with sensible device design the battery (and maybe other things) will be replaceable, too. Over time, EOMA68 solutions should be competitive on price, anyway, because larger numbers of them will be produced, but unlike traditional products, the increased usable lifespans of EOMA68 solutions will also offer longer-term savings to their purchasers, too.

“You could just buy a used laptop instead!”

Sure you could. At some point you will need to be buying a very old laptop just to have a CPU without a surveillance engine and offering some level of upgrade potential, although the specification might be disappointing to you. Even worse, things don’t last forever, particularly batteries and certain kinds of electronic components. Replacing those things may well be a challenge, and although it is worthwhile to make sure things get reused rather than immediately discarded, you can’t rely on picking up a particular product in the second-hand market forever. And relying on sourcing second-hand items is very much for limited edition products, whereas the EOMA68 initiative is meant to be concerned with reliably producing widely-available products.

“Why pay more for ideological purity?”

Firstly, words like “ideology”, “religion”, “church”, and so on, might be useful terms for trolls to poison and polarise any discussion, but does anyone not see that expecting suspiciously cheap, increasingly capable products to be delivered in an almost conveyor belt fashion is itself subscribing to an ideology? One that mandates that resources should be procured at the minimum cost and processed and assembled at the minimum cost, preferably without knowing too much about the human rights abuses at each step. Where everybody involved is threatened that at any time their role may be taken over by someone offering the same thing for less. And where a culture of exploitation towards those doing the work grows, perpetuating increasing wealth inequality because those offering the services in question will just lean harder on the workers to meet their cost target (while they skim off “their share” for having facilitated the deal). Meanwhile, no-one buying the product wants to know “how the sausage is made”. That sounds like an ideology to me: one of neoliberalism combined with feigned ignorance of the damage it does.

Anyway, people pay for more sustainable, more ethical products all the time. While the wilfully ignorant may jeer that they could just buy what they regard as the same thing for less (usually being unaware of factors like quality, never mind how these things get made), more sensible people see that the extra they pay provides the basis for a fairer, better society and higher-quality goods.

“There’s no point to such modularity!”

People argue this alongside the assertion that systems are easy to upgrade and that they can independently upgrade the RAM and CPU in their desktop tower system or whatever, although they usually start off by talking about laptops, but clearly not the kind of “welded shut” laptops that they or maybe others would apparently prefer to buy (see above). But systems are getting harder to upgrade, particularly portable systems like laptops, tablets, smartphones (with Fairphone 2 being a rare exception of being something that might be upgradeable), and even upgradeable systems are not typically upgraded by most end-users: they may only manage to do so by enlisting the help of more knowledgeable relatives and friends.

I use a 32-bit system that is over 11 years old. It could have more RAM, and I could do the job of upgrading it, but guess how much I would be upgrading it to: 2GB, which is as much as is supported by the two prototyped 32-bit architecture EOMA68 computer card designs (A20 and jz4775). Only certain 32-bit systems actually support more RAM, mostly because it requires the use of relatively exotic architectural features that a lot of software doesn’t support. As for the CPU, there is no sensible upgrade path even if I were sure that I could remove the CPU without causing damage to it or the board. Now, 64-bit systems might offer more options, and in upgradeable desktop systems more RAM might be added, but it still relies on what the chipset was designed to support. Some chipsets may limit upgrades based on either manufacturer pessimism (no-one will be able to afford larger amounts in the near future) or manufacturer cynicism (no-one will upgrade to our next product if they can keep adding more RAM).

EOMA68 makes a trade-off in order to support the upgrading of devices in a way that should be accessible to people who are not experts: no-one should be dealing with circuit boards and memory modules. People who think hardware engineering has nothing to do with compromises should get out of their armchair, join one of the big corporations already doing hardware, and show them how it is done, because I am sure those companies would appreciate such market-dominating insight.

Back to the Campaign

But really, the criticisms are not the things to focus on here. Maybe EOMA68 was interesting to you and then you read one of these criticisms somewhere and started to wonder about whether it is a good idea to support the initiative after all. Now, at least you have another perspective on them, albeit from someone who actually believes that EOMA68 provides an interesting and credible way forward for sustainable technology products.

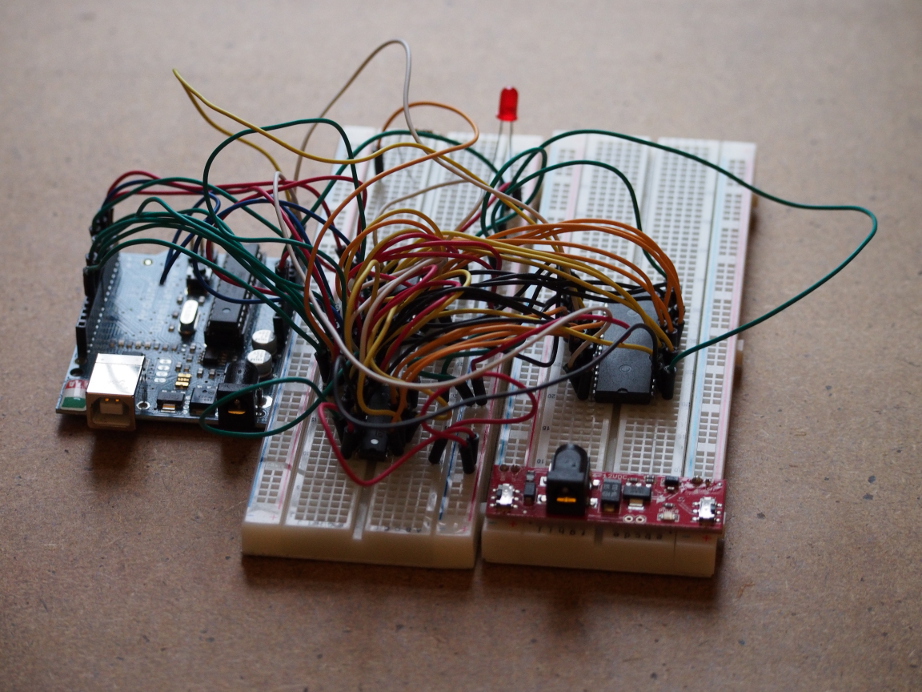

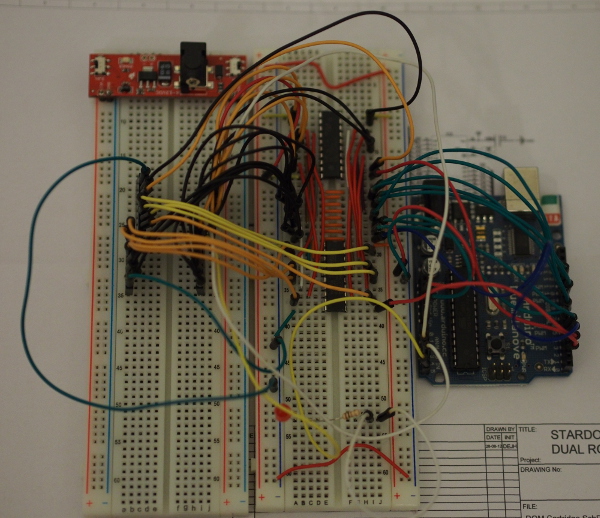

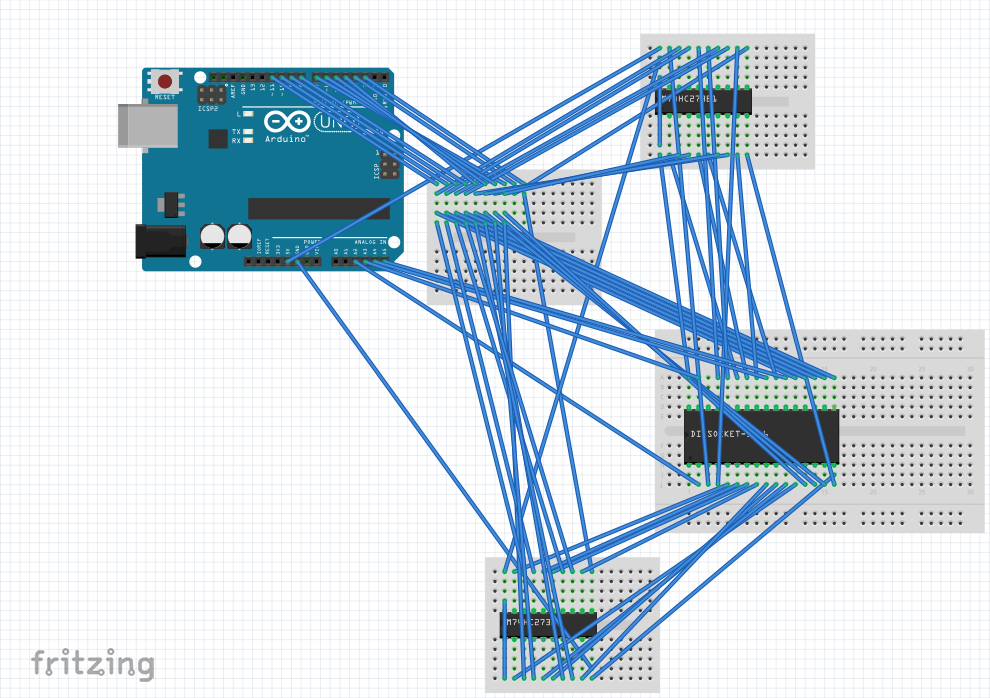

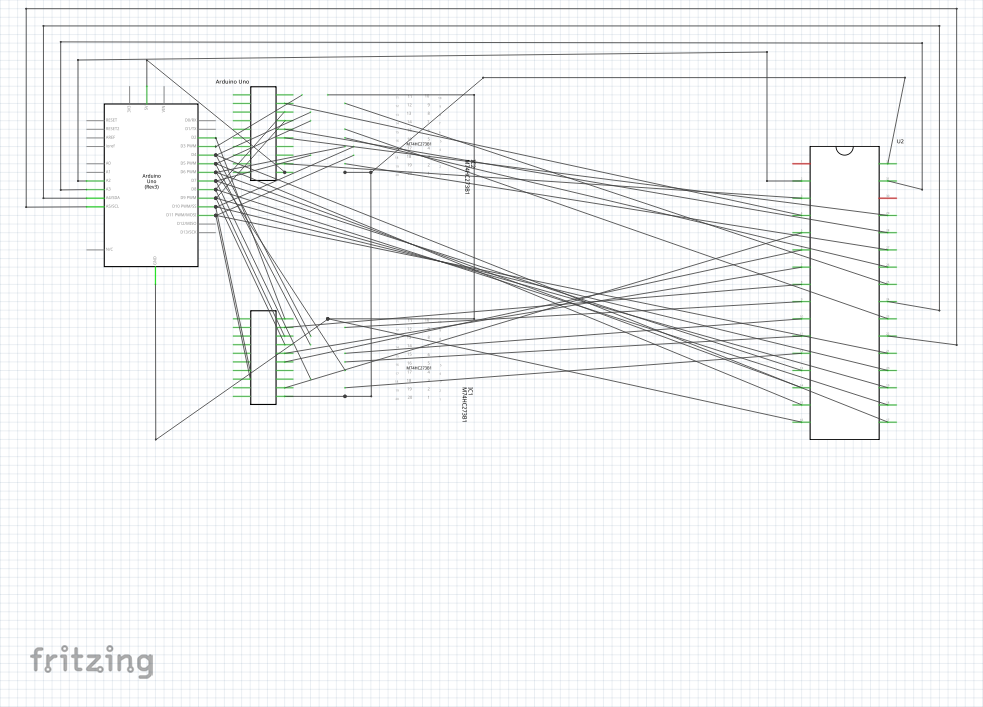

Certainly, this campaign is not for everyone. Above all else it is crowd-funding: you are pledging for rewards, not buying things, even though the aim is to actually manufacture and ship real products to those who have pledged for them. Some crowd-funding exercises never deliver anything because they underestimate the difficulties of doing so, leaving a horde of angry backers with nothing to show for their money. I cannot make any guarantees here, but given that prototypes have been made over the last few years, that videos have been produced with a charming informality that would surely leave no-one seriously believing that “the whole thing was just rendered” (which tends to happen a lot with other campaigns), and given the initiative founder’s stubbornness not to give up, I have a lot of confidence in him to make good on his plans.

(A lot of campaigns underestimate the logistics and, having managed to deliver a complicated technological product, fail to manage the apparently simple matter of “postage”, infuriating their backers by being unable to get packages sent to all the different countries involved. My impression is that logistics expertise is what Crowd Supply brings to the table, and it really surprises me that established freight and logistics companies aren’t dipping their toes in the crowd-funding market themselves, either by running their own services or taking ownership stakes and integrating their services into such businesses.)

Personally, I think that $65 for a computer card that actually has more RAM than most single-board computers is actually a reasonable price, but I can understand that some of the other rewards seem a bit more expensive than one might have hoped. But these are effectively “limited edition” prices, and the aim of the exercise is not to merely make some things, get them for the clique of backers, and then never do anything like this ever again. Rather, the aim is to demonstrate that such products can be delivered, develop a market for them where the quantities involved will be greater, and thus be able to increase the competitiveness of the pricing, iterating on this hopefully successful formula. People are backing a standard and a concept, with the benefit of actually getting some hardware in return.

Interestingly, one priority of the campaign has been to seek the FSF’s “Respects Your Freedom” (RYF) endorsement. There is already plenty of hardware that employs proprietary software at some level, leaving the user to merely wonder what some “binary blob” actually does. Here, with one of the software distributions for the computer card, all of the software used on the card and the policies of the GNU/Linux distribution concerned – a surprisingly awkward obstacle – will seek to meet the FSF’s criteria. Thus, the “Libre Tea” card will hopefully be one of the first general purpose computing solutions to actually be designed for RYF certification and to obtain it, too.

The campaign runs until August 26th and has over a thousand pledges. If nothing else, go and take a look at the details and the updates, with the latter providing lots of background including video evidence of how the software offerings have evolved over the course of the campaign. And even if it’s not for you, maybe people you know might appreciate hearing about it, even if only to follow the action and to see how crowd-funding campaigns are done.